(I'm a PhD student in applied math. I know some programming, but admittedly I know **very** little about databases.)

My question is: *how do I tackle/handle this database?*

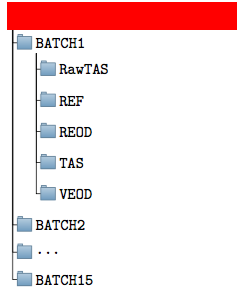

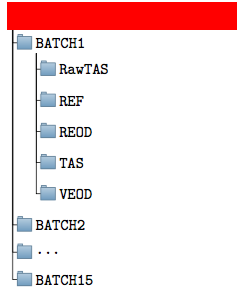

The problem is as follows. I have a "large" database (1 TB). The top-level folders are 15 batches, named BATCH1 ... BATCH15, as follows:

The only relevant folder is the RawTAS folder (actually the TAS folder, but RawTAS will do for the sake of this question).

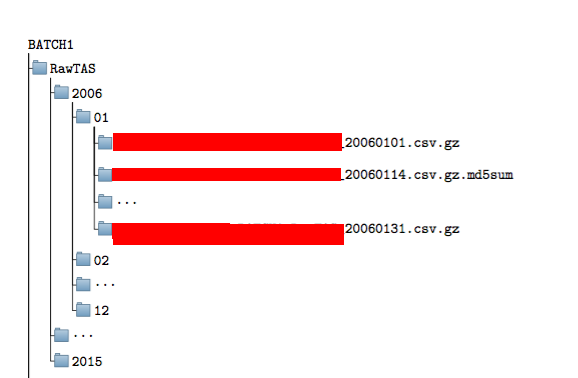

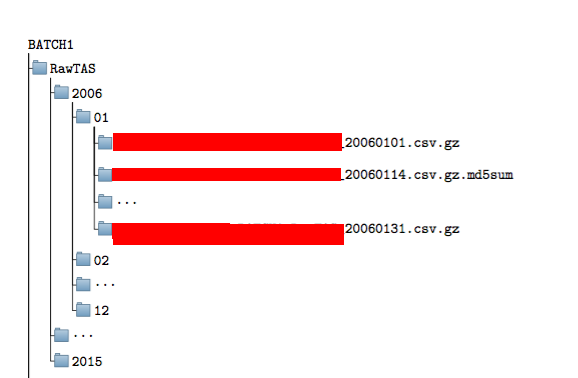

Going one level deeper, we find the following directory structure:

Each RawTAS folder has year folders from 2006 to 2015, and each year folder has monthly folders from 01 to 12. Each monthly folder contains a bunch of zipped CSV files. The issue is that when I extract these zip files they become insanely large, so unzipping all of them is infeasible.
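
To make the layout concrete, here is a minimal Python sketch of walking these folders and streaming rows out of one archive at a time, so that nothing is ever extracted to disk. It only uses the standard library (`zipfile`, `csv`, `pathlib`). The glob pattern `BATCH*/RawTAS/<year>/<month>/*.zip`, the UTF-8 encoding, and the helper names `iter_zip_paths`, `iter_rows` and `count_rows` are assumptions for illustration; the real files may differ.

```python
import csv
import io
import zipfile
from pathlib import Path


def iter_zip_paths(root):
    """Yield zip archives under root/BATCH*/RawTAS/<year>/<month>/ (assumed layout)."""
    yield from sorted(Path(root).glob("BATCH*/RawTAS/*/*/*.zip"))


def iter_rows(zip_path):
    """Stream CSV rows from every .csv member of one archive, without extracting it."""
    with zipfile.ZipFile(zip_path) as archive:
        for name in archive.namelist():
            if not name.lower().endswith(".csv"):
                continue  # skip anything that is not a CSV member
            with archive.open(name) as raw:
                # Encoding is an assumption; adjust if the files are not UTF-8.
                text = io.TextIOWrapper(raw, encoding="utf-8", newline="")
                yield from csv.reader(text)


def count_rows(root):
    """Example aggregate: count all CSV rows while holding one row in memory at a time."""
    return sum(1 for path in iter_zip_paths(root) for _ in iter_rows(path))
```

Something like `count_rows("/path/to/data")` (a placeholder path) would then touch every row once, decompressing on the fly. The same loop could instead filter rows or append them to a real database table.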

What would be the proper way of handling this kind of database?

Asked by Rainymood

(143 rep)

Mar 8, 2018, 10:17 AM

Last activity: Jun 19, 2025, 02:09 AM
