Best way to process over 150 million rows in MySQL

0 votes · 1 answer · 1416 views

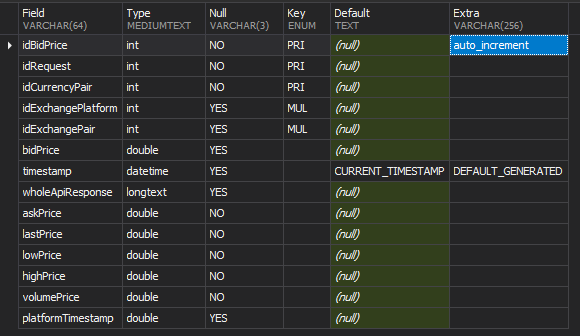

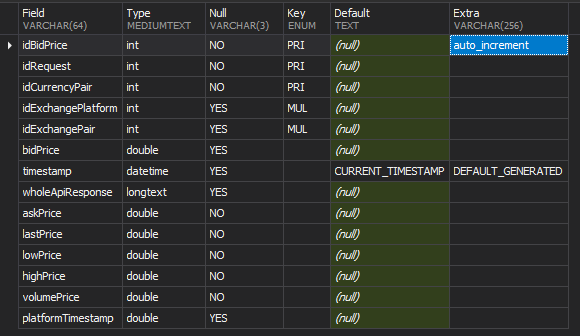

I wrote a Node.js function that SELECTs 150 million records, processes the data, and then UPDATEs those same 150 million records using

UPDATE `table` SET value1 = 'somevalue' WHERE idRo2 = 1;

I build a single UPDATE statement for each row, concatenate them all into one query string, and send that string in one call, with multipleStatements enabled on the db connection.

I've been encountering multiple errors, such as:

- Error: Connection lost: The server closed the connection.

- JavaScript heap out of memory.

- RangeError: Invalid string length.

- Killed process.
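The string-length and heap errors are consistent with building one query string for every row at once, since a JavaScript string has a maximum length. Below is a minimal sketch of the same concatenation capped at a fixed batch size. The helper names `batches` and `buildBatchUpdate`, the table name `my_table`, and the batch size are all hypothetical, and the sketch assumes a driver that accepts `?` placeholders (as the `mysql` and `mysql2` packages do).

```javascript
// Hypothetical helper: yield fixed-size slices of an in-memory array of rows.
function* batches(rows, size) {
  for (let i = 0; i < rows.length; i += size) {
    yield rows.slice(i, i + size);
  }
}

// Build one multi-statement query string and its placeholder values
// for a single batch. `my_table` is a placeholder table name;
// value1 and idRo2 are the columns from the statement in the question.
function buildBatchUpdate(batch) {
  const sql = batch
    .map(() => 'UPDATE `my_table` SET value1 = ? WHERE idRo2 = ?;')
    .join('\n');
  // Values are listed in the same order as the placeholders above.
  const params = batch.flatMap((row) => [row.value1, row.idRo2]);
  return { sql, params };
}

// Example with three rows and a batch size of two.
const sampleRows = [
  { idRo2: 1, value1: 'a' },
  { idRo2: 2, value1: 'b' },
  { idRo2: 3, value1: 'c' },
];
const queries = [...batches(sampleRows, 2)].map(buildBatchUpdate);
```

Each `{ sql, params }` pair could then be passed to `connection.query(sql, params)` one batch at a time, so that only one batch's string is held in memory. The rows themselves would also need to be read in pages rather than loaded all at once.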

I think I might not be using the right technologies or programming technique.

Edit:

The processing I need to do is to take the 'wholeApiResponse' column value, parse that string, and write the parsed values into newly added columns (askPrice, lastPrice, lowPrice, highPrice, volumePrice, platformTimestamp). This ends up modifying each existing row by filling in new values derived from a string it already contains.
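To make the per-row step concrete, here is a minimal sketch of the parsing. The format of 'wholeApiResponse' is not stated, so this assumes it is a JSON object whose keys match the new column names. That assumption, and the names `NEW_COLUMNS` and `parseApiResponse`, are hypothetical.

```javascript
// Column names taken from the description above.
const NEW_COLUMNS = [
  'askPrice',
  'lastPrice',
  'lowPrice',
  'highPrice',
  'volumePrice',
  'platformTimestamp',
];

// Assumption: wholeApiResponse is a JSON object keyed by the column names.
// Returns an object with one entry per new column (null when a key is
// missing), or null when the string is not valid JSON or not an object.
function parseApiResponse(wholeApiResponse) {
  let parsed;
  try {
    parsed = JSON.parse(wholeApiResponse);
  } catch {
    return null;
  }
  if (parsed === null || typeof parsed !== 'object') {
    return null;
  }
  const values = {};
  for (const column of NEW_COLUMNS) {
    values[column] = parsed[column] ?? null;
  }
  return values;
}

// Example input with only two of the six fields present.
const example = parseApiResponse('{"askPrice":"1.5","lastPrice":"1.4"}');
const invalid = parseApiResponse('not json');
```

Returning null for unparseable input lets the caller skip or log bad rows instead of aborting the whole run.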

Asked by Christopher Martinez

(107 rep)

Mar 28, 2021, 10:41 PM

Last activity: Mar 29, 2021, 05:27 AM
