ETL staging area incremental load design considerations

We are currently working on a design for our new data warehouse. In our current data warehouse we have a 'persistent staging area' or PSA. We load new records incrementally into a staging table and then incrementally load the new data into the PSA table, so we have the complete set available in the DWH. We are going to use SAS on SQL Server if that would make any difference.

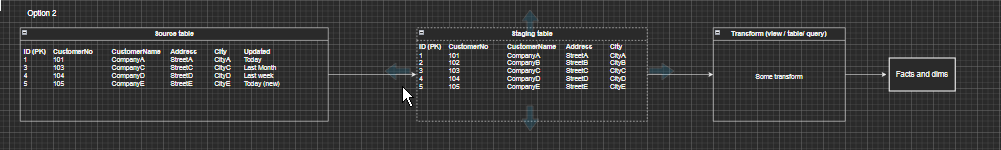

We are now working out whether we still want this 2-layer setup, or whether a single layer would be sufficient, on the condition that we can still load incrementally. I see several approaches:

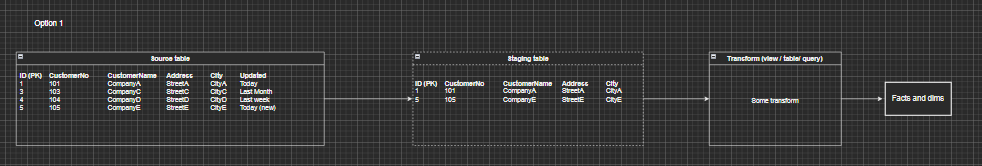

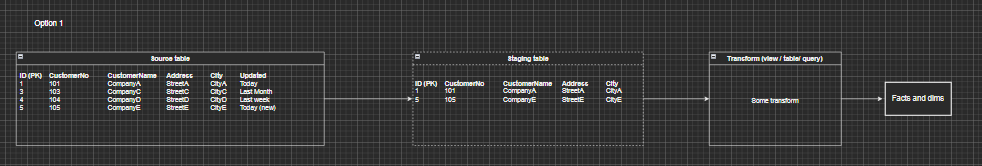

1. Have a single staging layer that truncates the staging table and then loads records incrementally into the final facts and dims. I don't think this is possible: as soon as the transform layer combines two tables (A and B), the results are incorrect. For example, staging tables A and B are joined into table C. If a new row is loaded into table B but the related row in table A was not changed (and is therefore not loaded into staging table A), an INNER JOIN produces no row for table C, and neither does a LEFT OUTER JOIN with table A as the left table. You always need the full data set to get the correct result, let alone the fact that we need some indexes for the transform layer. Or am I missing something here?

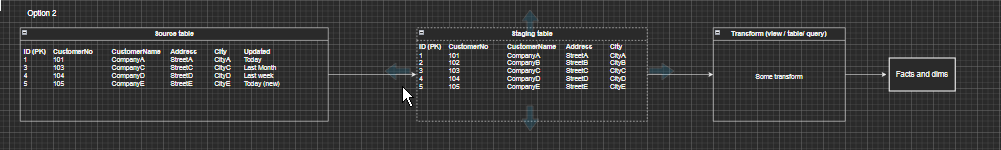

2. Have a single staging layer that is not truncated in advance. This way we keep all records from the source (even deleted ones, but that is another discussion). The problem is that the transform layer needs indexes on this layer, so the extract would take longer because the indexes have to be maintained during the load. That in turn puts more pressure on the source system. We could drop the indexes before the load, but I am not sure which would perform better; I think we need to test that. Our ERP vendor also requires us to use NOLOCK (long discussion, never mind), so this setup would also result in more faulty records that we would need to filter out.

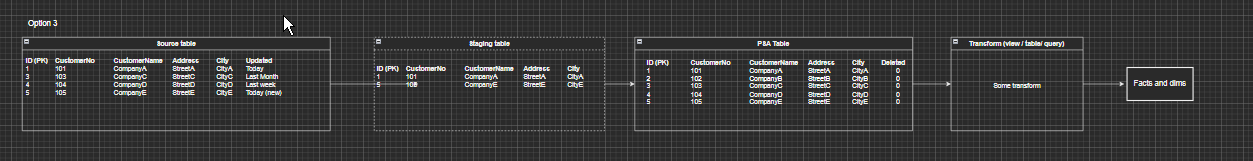

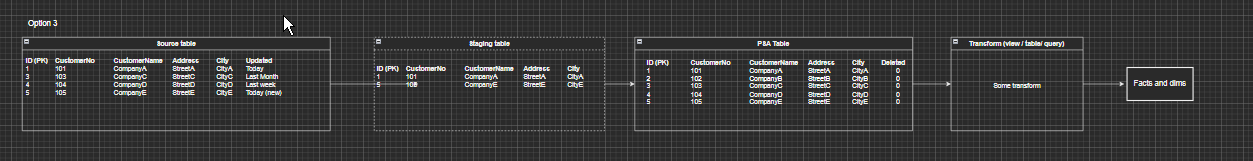

3. Use a 2-layer setup as we do now. Layer 1 loads the new records incrementally; layer 2 is then loaded incrementally with the new records from layer 1.
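To make the difference between option 1 and option 3 concrete, here is a minimal sketch using Python's built-in `sqlite3` as a stand-in for SQL Server. The table names (`stg_a`, `stg_b`, `psa_a`, `psa_b`), the columns and the sample rows are all made up for illustration; they are not our real model. On day 2 only table B receives a new row, so the truncated staging tables cannot be joined, while the persistent copies can.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE stg_a (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE stg_b (id INTEGER PRIMARY KEY, a_id INTEGER, amount REAL);
    CREATE TABLE psa_a (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE psa_b (id INTEGER PRIMARY KEY, a_id INTEGER, amount REAL);
""")


def load_batch(rows_a, rows_b):
    """Layer 1: truncate staging and load only the delta.
    Layer 2: upsert that delta into the persistent staging area."""
    conn.execute("DELETE FROM stg_a")
    conn.execute("DELETE FROM stg_b")
    conn.executemany("INSERT INTO stg_a VALUES (?, ?)", rows_a)
    conn.executemany("INSERT INTO stg_b VALUES (?, ?, ?)", rows_b)
    conn.execute("INSERT OR REPLACE INTO psa_a SELECT * FROM stg_a")
    conn.execute("INSERT OR REPLACE INTO psa_b SELECT * FROM stg_b")


def joined_rows(table_a, table_b):
    """Inner join of A and B, i.e. the rows that would end up in table C."""
    sql = (
        f"SELECT a.id, a.name, b.amount FROM {table_a} AS a "
        f"JOIN {table_b} AS b ON b.a_id = a.id ORDER BY b.id"
    )
    return conn.execute(sql).fetchall()


# Day 1: one row in A and one related row in B.
load_batch([(1, "Customer X")], [(10, 1, 100.0)])
# Day 2: A is unchanged, B receives a new row for the same A record.
load_batch([], [(11, 1, 250.0)])

delta_result = joined_rows("stg_a", "stg_b")  # option 1: staging deltas only
full_result = joined_rows("psa_a", "psa_b")   # option 3: persistent copies

print(delta_result)
print(full_result)
```

With only the deltas available, the join finds nothing for day 2 because the matching A row is no longer in staging. The join on the persistent tables returns both B rows with their A record.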

I don't think options 1 and 2 are the way to go for us, but I would really like to know whether I am missing something in this picture, what your experiences are, and which staging setups you use and why. Any input would be very much appreciated.

I will try to give an example of what prevents me from truncating the staging tables. To keep things simple, take the situation where we start building a new fact table. We have a source system with, say, 1000 tables. The business asks for a new report that needs data from 20 of those tables. We start by writing a query, a chain of CTEs, that produces the end result in facts and dimensions. We do this first because our current DWH is neither user-friendly nor easy to modify, which is why we are migrating to a new DWH.

Once the CTE query is developed and the business agrees with the end result, we start building it in the data warehouse. We import the 20 source tables with the required columns and load them incrementally using a change date column. Next we create a PSA table in which new records are inserted and changed records are updated daily. Then we build our transform, which currently consists mostly of views containing joins, intermediate result tables for performance, unions, etc. This can be very complex. The end result is loaded into the fact table with a truncate and load, which is currently faster than the logic our DWH tool uses to update facts and dims incrementally. Next we build the report.

Now the business asks for another, mostly different report. We build another model using the same principles. This model also uses 5 of the tables used by the first report. If we did not have the staging tables (staging layer 1 incremental, layer 2 PSA) and ran the transform without them, the query would run directly on our source system, which we do not want. Secondly, if data changes in the 5 overlapping source tables, the two queries would produce different end results.

I hope the example makes the situation clearer.
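For reference, the incremental load on a change date column described in the example can be sketched roughly as follows. This again uses Python's `sqlite3` instead of SAS on SQL Server, and the `orders` table, the `psa_orders` table, the `incremental_load` helper and the dates are hypothetical names and values chosen only for the sketch.

```python
import sqlite3

src = sqlite3.connect(":memory:")  # stands in for the ERP source system
dwh = sqlite3.connect(":memory:")  # stands in for the data warehouse

src.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, change_date TEXT)"
)
dwh.execute(
    "CREATE TABLE psa_orders (id INTEGER PRIMARY KEY, status TEXT, change_date TEXT)"
)


def incremental_load(watermark):
    """Copy rows changed after `watermark` into the PSA and return the new watermark."""
    delta = src.execute(
        "SELECT id, status, change_date FROM orders "
        "WHERE change_date > ? ORDER BY change_date",
        (watermark,),
    ).fetchall()
    # New ids are inserted, existing ids are overwritten with the latest version.
    dwh.executemany("INSERT OR REPLACE INTO psa_orders VALUES (?, ?, ?)", delta)
    dwh.commit()
    # ISO-formatted dates sort correctly as text, so the last row holds the maximum.
    return delta[-1][2] if delta else watermark


# Day 1: initial load of two orders.
src.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "open", "2022-11-16"), (2, "open", "2022-11-16")],
)
watermark = incremental_load("1900-01-01")

# Day 2: one order changes and one new order arrives.
src.execute("UPDATE orders SET status = 'shipped', change_date = '2022-11-17' WHERE id = 1")
src.execute("INSERT INTO orders VALUES (3, 'open', '2022-11-17')")
watermark = incremental_load(watermark)

psa_rows = dwh.execute("SELECT id, status FROM psa_orders ORDER BY id").fetchall()
print(watermark, psa_rows)
```

Both report models would then read from `psa_orders` rather than from the source, so they see the same version of the 5 shared tables. Note that a strict `>` comparison on a date-only column would miss rows changed later on the same day as the watermark, and that rows deleted in the source are never picked up this way.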

Asked by Niels Broertjes

(549 rep)

Nov 17, 2022, 09:30 AM

Last activity: Nov 18, 2022, 08:06 AM
