Azure SQL index rebuild after huge size reduction

2

votes

3

answers

559

views

We have a table in an Azure SQL database that used to have an nvarchar(max) column storing pdf files. (The wonders of external developers.) The table grew to 156 GB. It has 476000 rows.

After changing the logic, we no longer needed the pdf column. The only reasonable way to get rid of the data in it was to drop the column and recreate the column (in case some weird process was still referencing it).

However, the table size is still reported as 156 GB.

The backup table I just created (SELECT INTO) is 128 MB, so that seems to be the real size of the data.

I let an index rebuild (ONLINE) run overnight on the clustered PK index. It failed with a TCP error sometime between 8 and 12 hours. The index is still 95% fragmented, the size is still reported as 156 GB.

Is there an explanation why this is so eye-wateringly slow? **Is there a better way?** Production database, table is used by a website, has to be accessible, so can't do it OFFLINE unless it takes less than 10 minutes - which nobody can guarantee.

Can I just *build all the indexes on the backup table, drop the original table and rename the backup?* That sounds risky (small risk of losing a record created just the wrong time).

-----

I'm trying to make Azure realize it is no longer used. Allocated, I'm OK with that. Used, not so much:

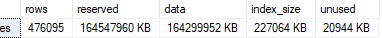

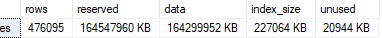

The table in question:

The table in question:

Again, it's not the reserved space that is the issue, it is the used space.

Again, it's not the reserved space that is the issue, it is the used space.

The table in question:

The table in question:

Again, it's not the reserved space that is the issue, it is the used space.

Again, it's not the reserved space that is the issue, it is the used space.

Asked by vacip

(133 rep)

Jan 13, 2024, 08:30 AM

Last activity: Jan 17, 2024, 01:16 PM

Last activity: Jan 17, 2024, 01:16 PM