How can I improve low IOPS performance for an Azure SQL Database Hyperscale elastic pool?

1

vote

1

answer

462

views

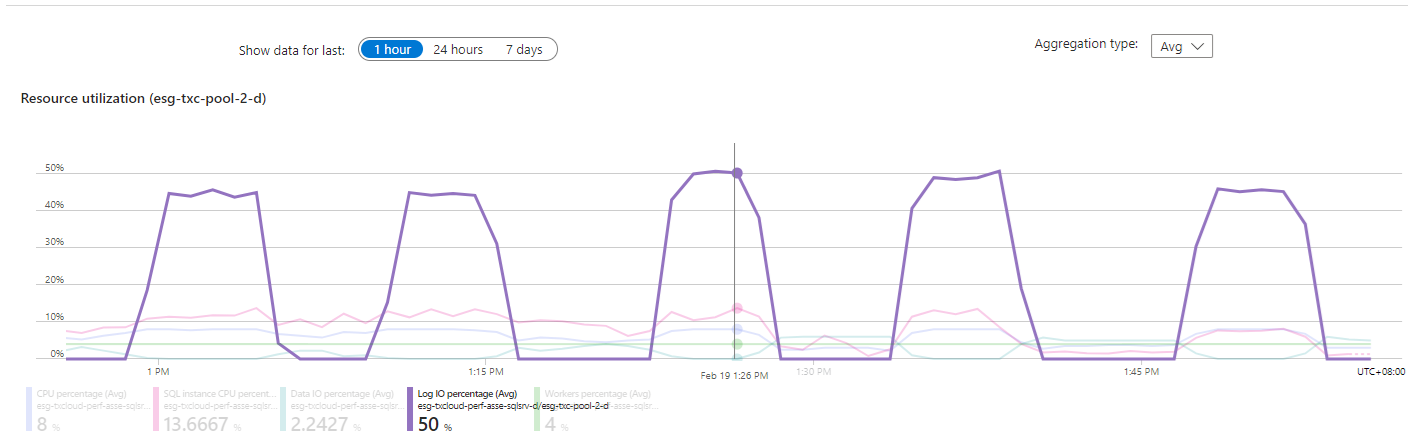

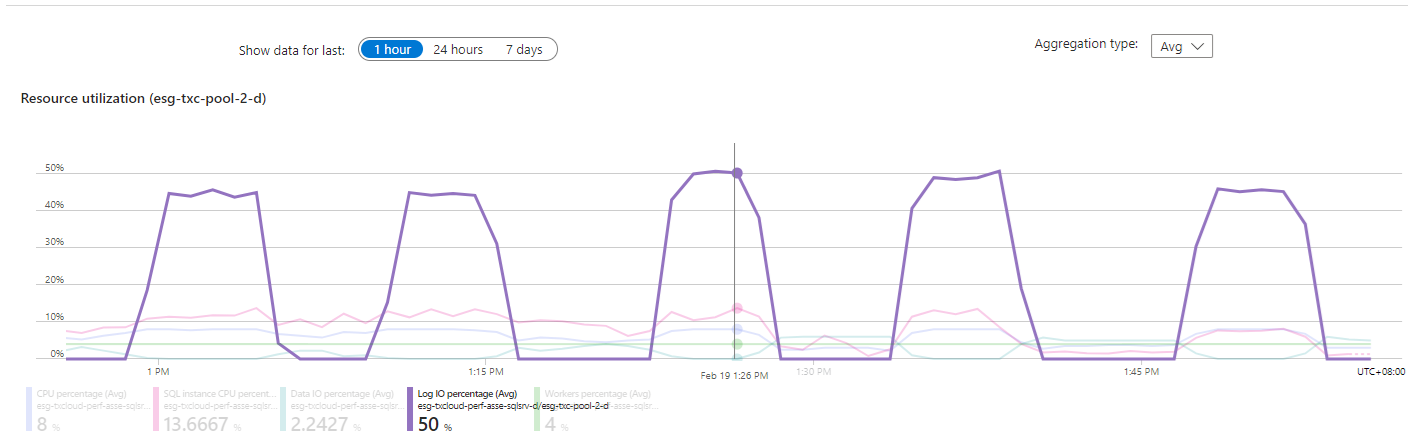

I'm doing performance testing a series of data migration scripts which mainly consist of large INSERTs (sometimes 100M-200M records) with often complex SELECTs with a lot of hash joins.

Configuration:

- **Hyperscale: Premium-series, 16 vCores**

- Max **4TB** of Locally-redundant backup storage, however for most of the tests only ~1TB was allocated

Problem: during one of the critical queries which takes circa 2 hours and performs a 100M+ insert on a heavily indexed table (7 indexes) there seem to be extremely wild swings in IOPS write performance:  I set **MAXDOP=16** manually for the query (1 DOP per vCPU)

Questions:

1. My best guess is that the IOPS gets severely degraded when the local SSD (presumably with a maximum of 2560 IOPS per vCore ) gets filled up and the severe bottleneck becomes the shared storage instance. Is this accurate? Note that in this case this happens roughly 50% of the time and virtually everything grinds down to a halt.

2. If this is the case, is there a way to calculate the effective minimum IOPS of the shared storage instance?

3. Is there a way to improve the minimum IOPS of the shared storage instance, e.g. by manually over-allocating the storage space? (this would be the standard approach for a lot of other configurations where e.g. you get 3 IOPS per GB)

4. How can I get the actual IOPS figures rather than %?

5. Any other suggestions/tips?

Thanks in advance

I set **MAXDOP=16** manually for the query (1 DOP per vCPU)

Questions:

1. My best guess is that the IOPS gets severely degraded when the local SSD (presumably with a maximum of 2560 IOPS per vCore ) gets filled up and the severe bottleneck becomes the shared storage instance. Is this accurate? Note that in this case this happens roughly 50% of the time and virtually everything grinds down to a halt.

2. If this is the case, is there a way to calculate the effective minimum IOPS of the shared storage instance?

3. Is there a way to improve the minimum IOPS of the shared storage instance, e.g. by manually over-allocating the storage space? (this would be the standard approach for a lot of other configurations where e.g. you get 3 IOPS per GB)

4. How can I get the actual IOPS figures rather than %?

5. Any other suggestions/tips?

Thanks in advance

I set **MAXDOP=16** manually for the query (1 DOP per vCPU)

Questions:

1. My best guess is that the IOPS gets severely degraded when the local SSD (presumably with a maximum of 2560 IOPS per vCore ) gets filled up and the severe bottleneck becomes the shared storage instance. Is this accurate? Note that in this case this happens roughly 50% of the time and virtually everything grinds down to a halt.

2. If this is the case, is there a way to calculate the effective minimum IOPS of the shared storage instance?

3. Is there a way to improve the minimum IOPS of the shared storage instance, e.g. by manually over-allocating the storage space? (this would be the standard approach for a lot of other configurations where e.g. you get 3 IOPS per GB)

4. How can I get the actual IOPS figures rather than %?

5. Any other suggestions/tips?

Thanks in advance

I set **MAXDOP=16** manually for the query (1 DOP per vCPU)

Questions:

1. My best guess is that the IOPS gets severely degraded when the local SSD (presumably with a maximum of 2560 IOPS per vCore ) gets filled up and the severe bottleneck becomes the shared storage instance. Is this accurate? Note that in this case this happens roughly 50% of the time and virtually everything grinds down to a halt.

2. If this is the case, is there a way to calculate the effective minimum IOPS of the shared storage instance?

3. Is there a way to improve the minimum IOPS of the shared storage instance, e.g. by manually over-allocating the storage space? (this would be the standard approach for a lot of other configurations where e.g. you get 3 IOPS per GB)

4. How can I get the actual IOPS figures rather than %?

5. Any other suggestions/tips?

Thanks in advance

Asked by Andrew G

(71 rep)

Mar 5, 2024, 03:39 AM

Last activity: Aug 4, 2025, 02:46 AM

Last activity: Aug 4, 2025, 02:46 AM