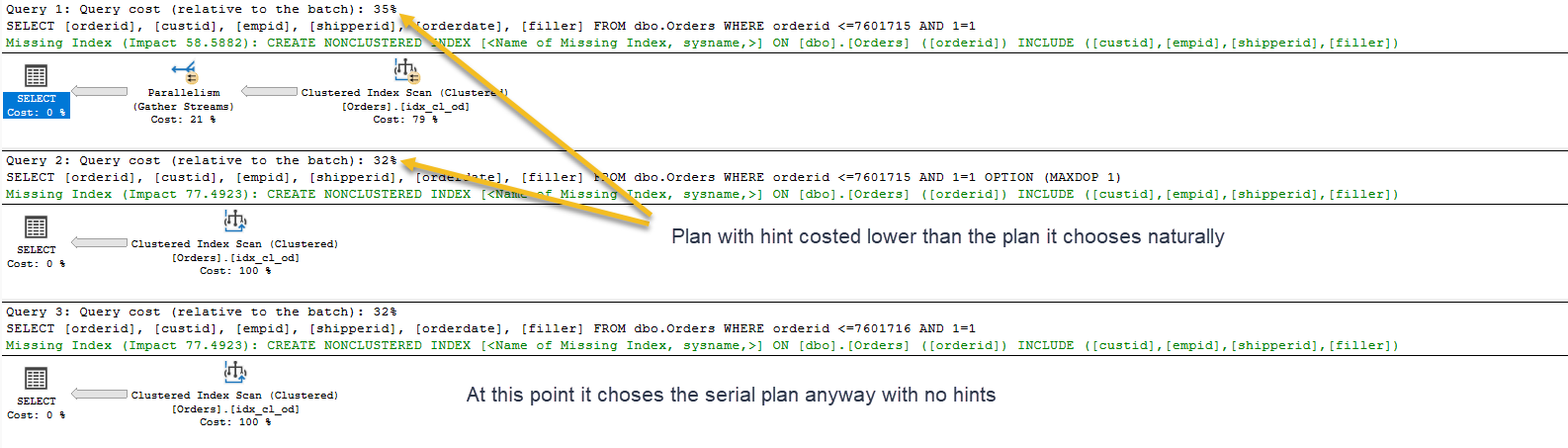

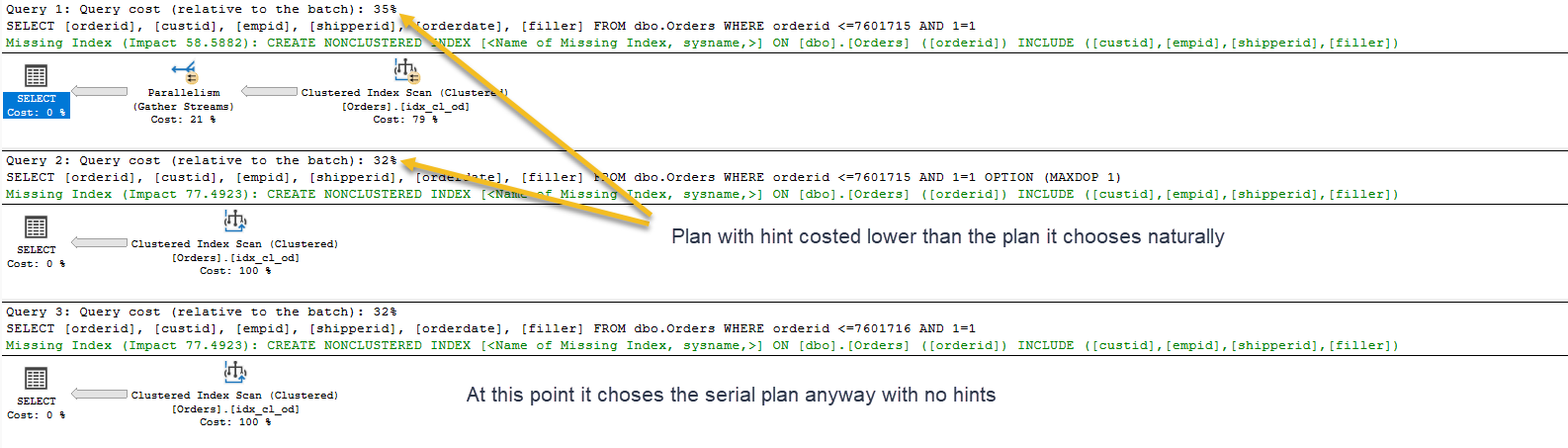

Why is the tipping point between a serial and parallel plan not exactly the point where the serial plan is costed less?

6

votes

1

answer

453

views

For the example data...

/*Quick and dirty generation of some rows of data*/

SELECT value as [orderid],

1 as [custid],

1 as [empid],

1 as [shipperid],

getdate() as [orderdate],

'abcdefgh' as [filler]

INTO dbo.Orders

FROM generate_series(1,10000000)

CREATE CLUSTERED INDEX [idx_cl_od] ON [dbo].[Orders]

(

[orderdate] ASC

)

UPDATE STATISTICS dbo.Orders WITH FULLSCANSELECT [orderid], [custid], [empid], [shipperid], [orderdate], [filler]

FROM dbo.Orders

WHERE orderid <=7601715 AND 1=1 /*Prevent simple parameterisation*/46.8853 regardless of serial or parallel plan.

And the CPU cost of the scan is 11.0002 in the serial plan and 2.75004 in the parallel one

So I was expecting the tipping point between the plans to be when the parallelism operator exceeded 8.25016 (a threshold reached when estimated rows going into it is around 4.5 million). In reality at the point this actually occurs the cost for the gather streams operator is 13.0501 (around 3 million rows higher than I expected).

If SQL Server isn't using overall plan cost as the tipping point what *is* the actual logic?

(XML for estimated plans on pastetheplan )

Asked by Martin Smith

(87941 rep)

Dec 7, 2024, 11:22 AM

Last activity: Dec 7, 2024, 04:09 PM

Last activity: Dec 7, 2024, 04:09 PM