Phenomenon observed when adding a column via ALTER TABLE on a table with 300M records

This relates to an existing question I asked: https://dba.stackexchange.com/questions/345282/performant-way-to-perform-update-over-300m-records-in-mysql. I assumed that altering the table schema as follows would be fairly trivial:

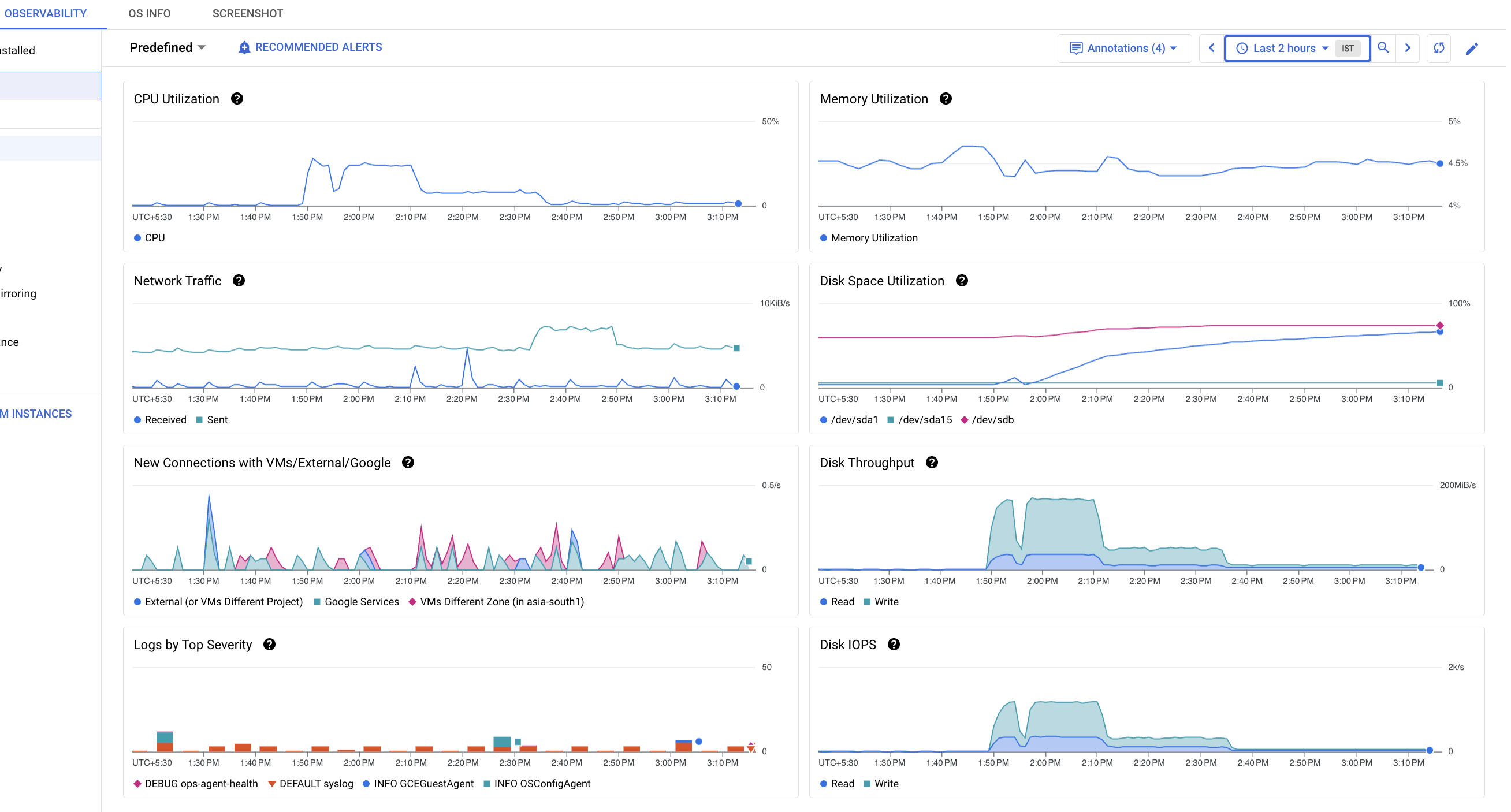

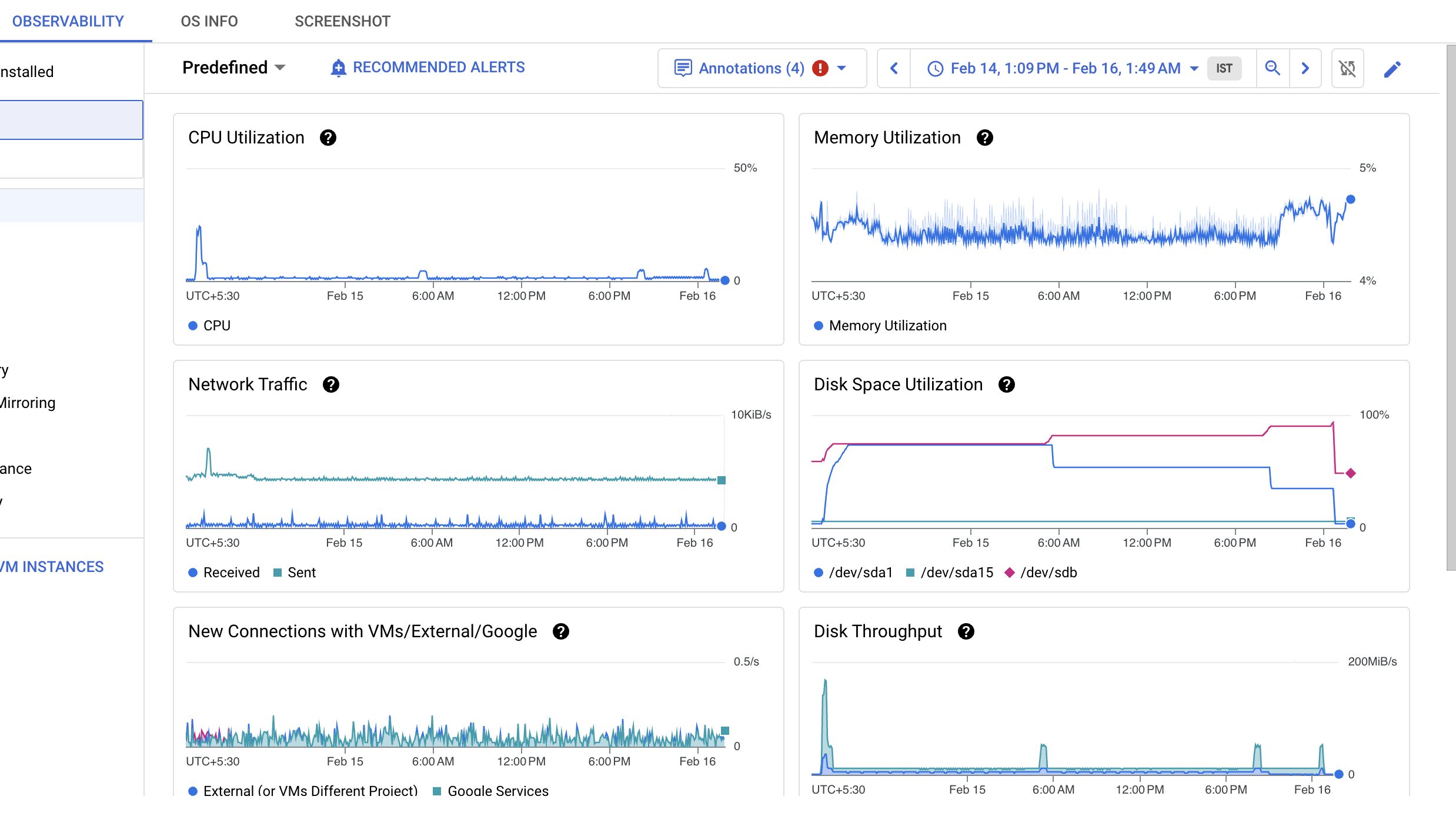

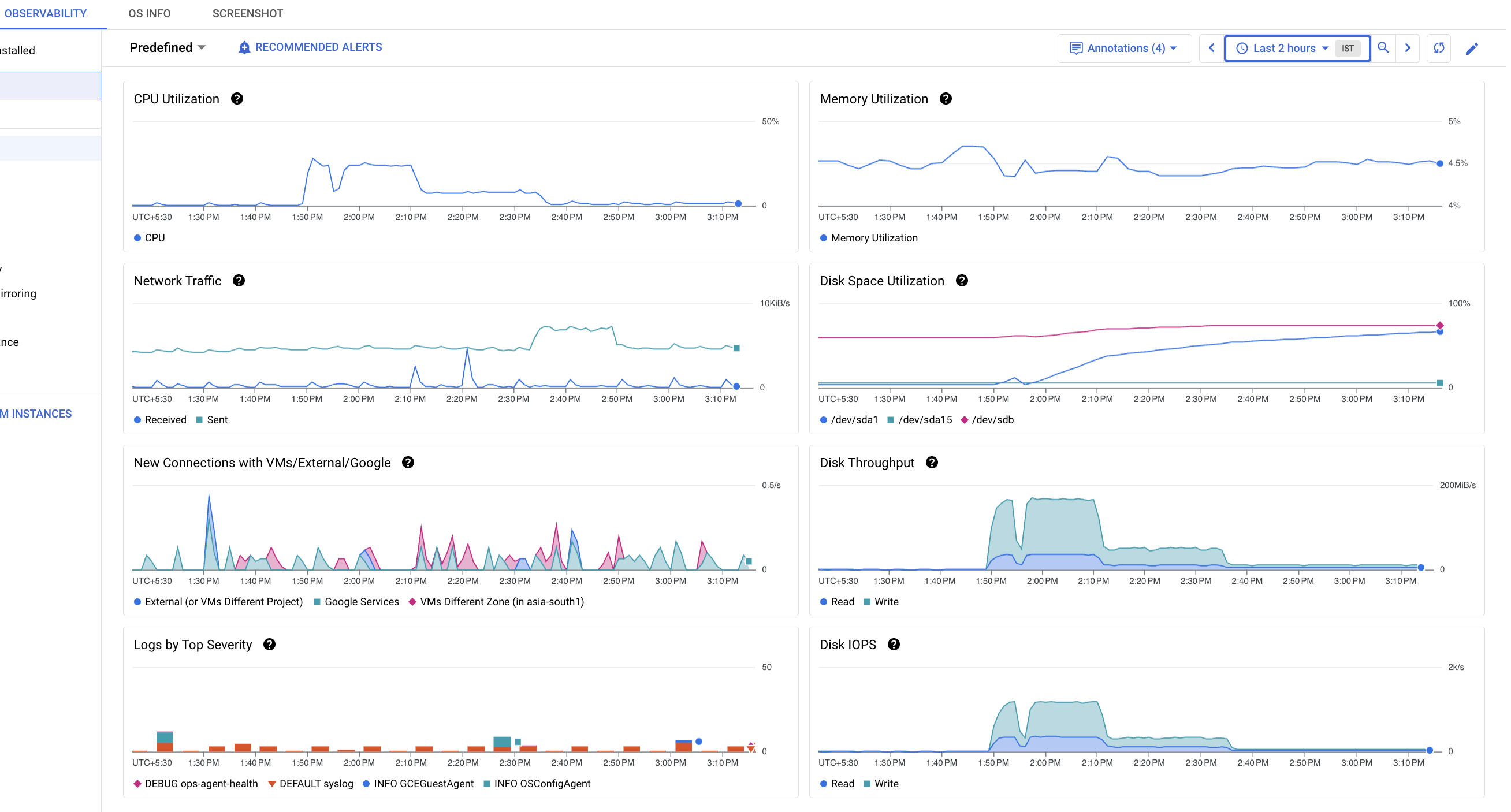

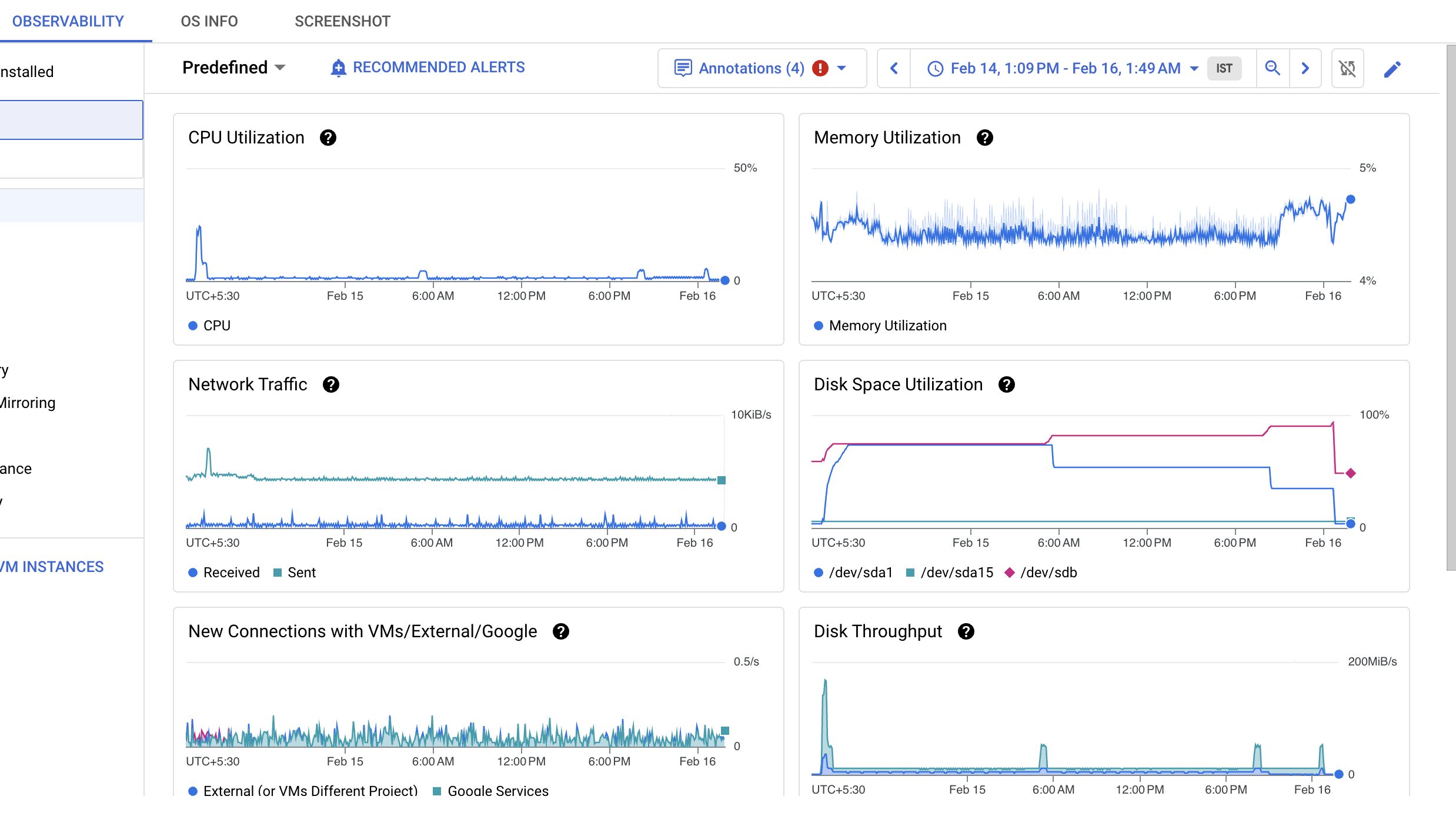

As soon as the command was triggered, I expected the CPU to stay consistently high, but that was the case only for around 20 minutes. Then there was a sudden dip to 10% (**Q1**: What underlying event could cause this?), after which it fell below 5% and stayed there until the command finished executing.
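One way to investigate Q1 is to check which internal phase the in-place ALTER is in when the CPU drops. This is a minimal sketch, assuming a 5.7 build that ships the InnoDB `ALTER TABLE` stage instrumentation (documented for later 5.7 releases; whether the 5.7.0 build mentioned here has it is an assumption) and that the Performance Schema is enabled. A plausible but unverified explanation for the dip is that the early `read PK and internal sort` phase is CPU-bound, while the later `merge sort` and `insert` (index build) phases mostly wait on disk I/O.

```sql
-- Run before starting the ALTER: enable InnoDB ALTER TABLE stage events.
-- These runtime settings are not persisted across a server restart.
UPDATE performance_schema.setup_instruments
   SET ENABLED = 'YES'
 WHERE NAME LIKE 'stage/innodb/alter%';

-- Enable the consumers that record current and historical stage events.
UPDATE performance_schema.setup_consumers
   SET ENABLED = 'YES'
 WHERE NAME LIKE '%stages%';

-- From a second session while the ALTER runs: current phase and rough progress.
SELECT EVENT_NAME, WORK_COMPLETED, WORK_ESTIMATED
  FROM performance_schema.events_stages_current;

-- Recently completed phases (per-thread history).
SELECT EVENT_NAME, WORK_COMPLETED, WORK_ESTIMATED
  FROM performance_schema.events_stages_history;
```

Correlating the time of the CPU dip with the `EVENT_NAME` reported at that moment would confirm or rule out the phase hypothesis.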

**Q2.** The command took **~35 hours** to execute. Is there scope for improving this further?
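On Q2: according to the MySQL 5.7 online DDL documentation, adding a column with `ALGORITHM=INPLACE` still rebuilds the table, so every row and every secondary index is rewritten; `LOCK=NONE` only keeps the table available for concurrent DML during that rebuild. A metadata-only alternative exists from MySQL 8.0.12 onward. The sketch below assumes an upgrade to 8.0.12+ is an option; it does not apply to 5.7.

```sql
-- MySQL 8.0.12+ only: add the column by changing table metadata, without a rebuild.
-- Before 8.0.29, INSTANT only supports appending the column as the last column,
-- which is what a plain ADD COLUMN (no FIRST/AFTER) does.
ALTER TABLE interaction
  ADD COLUMN type VARCHAR(32),
  ALGORITHM=INSTANT;
```

Because `ALGORITHM` is given explicitly, the server returns an error instead of silently falling back to a slower algorithm if the instant path is not available for this table.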

**Update**: The MySQL version in use is 5.7.0.
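Version 5.7.0 predates the first GA release of the 5.7 series (5.7.9), so it may be worth confirming the exact version string together with the settings that most directly affect an in-place table rebuild. This is a minimal diagnostic sketch; the choice of variables is an assumption about what is relevant here.

```sql
-- Exact server version string.
SELECT VERSION();

-- Settings that influence in-place rebuilds. Names the server does not know
-- simply return no row (e.g. innodb_tmpdir may be absent on older 5.7 builds).
SHOW GLOBAL VARIABLES
 WHERE Variable_name IN ('innodb_buffer_pool_size',
                         'innodb_sort_buffer_size',
                         'innodb_online_alter_log_max_size',
                         'innodb_tmpdir',
                         'tmpdir');
```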

The table definition:

```sql
CREATE TABLE interaction (
  id varchar(36) NOT NULL,
  entity_id varchar(36) DEFAULT NULL,
  request_date datetime DEFAULT NULL,
  user_id varchar(255) DEFAULT NULL,
  sign varchar(1) DEFAULT NULL,
  PRIMARY KEY (id),
  KEY entity_id_idx (entity_id),
  KEY user_id_idx (user_id),
  KEY req_date_idx (request_date)
) ENGINE=InnoDB DEFAULT CHARSET=latin1;
```

The command that was executed:

```sql
ALTER TABLE interaction
  ALGORITHM=INPLACE,
  LOCK=NONE,
  ADD COLUMN type VARCHAR(32);
```
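If this ALTER rebuilds the table (as the 5.7 online DDL documentation lists for `ADD COLUMN`), its duration tracks the on-disk size of the clustered index (keyed on the 36-character `id`) plus the three secondary indexes more than the row count alone. A sketch for checking that size, assuming it is run from the database that holds `interaction`; note that `TABLE_ROWS` is only an estimate for InnoDB tables.

```sql
-- Approximate size of the data and indexes a rebuild has to rewrite.
SELECT TABLE_NAME,
       TABLE_ROWS,                                        -- estimate for InnoDB
       ROUND(DATA_LENGTH  / POWER(1024, 3), 1) AS data_gb,
       ROUND(INDEX_LENGTH / POWER(1024, 3), 1) AS index_gb
  FROM information_schema.TABLES
 WHERE TABLE_SCHEMA = DATABASE()
   AND TABLE_NAME = 'interaction';
```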

```
Query OK, 0 rows affected (1 day 10 hours 46 min 13.20 sec)
Records: 0  Duplicates: 0  Warnings: 0
```
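For a sense of scale, the reported elapsed time converts to an average rate as follows, assuming the table holds roughly 300M rows as stated in the title (the exact count is not given). The `0 rows affected` result is consistent with an in-place operation; ALTERs that copy the table typically report the number of rows copied.

```sql
-- 1 day 10 hours 46 min 13.20 sec expressed in seconds: 125173.20
SELECT (24 + 10) * 3600 + 46 * 60 + 13.20 AS elapsed_sec,
       -- Average rows processed per second, assuming ~300M rows: roughly 2397
       300000000 / ((24 + 10) * 3600 + 46 * 60 + 13.20) AS approx_rows_per_sec;
```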

Asked by Naman

(123 rep)

Feb 16, 2025, 05:45 PM

Last activity: Feb 18, 2025, 01:51 AM