As a start:

- The shard key was refined to a compound key: group > user > document_name (previously it was group only)

- The cluster is composed of 2 shards

- There are about a billion entries

- MongoDB 6.0 is being used

- The database itself seems to work fine

After running the admin command [refineCollectionShardKey](https://www.mongodb.com/docs/manual/reference/command/refineCollectionShardKey/#mongodb-dbcommand-dbcmd.refineCollectionShardKey), the balancer kept increasing the chunk count on only one shard, which resulted in the following status:

    'work.FILE': {
      shardKey: { 'user.group': 1, 'user.name': 1, document_name: 1 },
      unique: false,
      balancing: true,
      chunkMetadata: [
        { shard: 'RepSet1', nChunks: 26 },
        { shard: 'RepSet2', nChunks: 4248 }
      ],
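For reference, the refine step can be sketched in mongosh roughly as follows. The key document is copied from the status output above; the exact invocation that was used is an assumption, and the `typeof db` guard only exists so the snippet also loads harmlessly outside mongosh.

```javascript
// Command document for refining the shard key of work.FILE.
// The key fields are taken from the status output above.
const refineCmd = {
  refineCollectionShardKey: "work.FILE",
  key: { "user.group": 1, "user.name": 1, document_name: 1 },
};

// `db` only exists inside mongosh; elsewhere this block is skipped.
if (typeof db !== "undefined") {
  // Assumption: an index supporting the new key already exists,
  // which refineCollectionShardKey requires.
  printjson(db.adminCommand(refineCmd));
}
```

The old key (`user.group`) stays as the prefix of the new key, which is what the command expects.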

With this:

    {
      'Currently enabled': 'yes',
      'Currently running': 'no',
      'Failed balancer rounds in last 5 attempts': 0,
      'Migration Results for the last 24 hours': {
        '13': "Failed with error 'aborted', from RepSet1 to RepSet2",
        '4211': 'Success'
      }
    }

Also:

    Shard RepSet1
    {
      data: '1179.56GiB',
      docs: Long("2369155472"),
      chunks: 26,
      'estimated data per chunk': '45.36GiB',
      'estimated docs per chunk': 91121364
    }
    ---
    Shard RepSet2
    {
      data: '1179.78GiB',
      docs: 2063496305,
      chunks: 4248,
      'estimated data per chunk': '284.39MiB',
      'estimated docs per chunk': 485757
    }
    ---
    Totals
    {
      data: '2359.35GiB',
      docs: 4432651777,
      chunks: 4274,
      'Shard RepSet1': [
        '49.99 % data',
        '53.44 % docs in cluster',
        '534B avg obj size on shard'
      ],
      'Shard RepSet2': [
        '50 % data',
        '46.55 % docs in cluster',
        '613B avg obj size on shard'
      ]
    }
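To make the contrast in these numbers explicit, here is a small sketch that recomputes the per-chunk averages and the data share of each shard. The input figures are copied from the output above; the variable names are only illustrative.

```javascript
// Figures copied from the shard distribution output above.
const shards = [
  { name: "RepSet1", dataGiB: 1179.56, docs: 2369155472, chunks: 26 },
  { name: "RepSet2", dataGiB: 1179.78, docs: 2063496305, chunks: 4248 },
];

const totalGiB = shards.reduce((sum, s) => sum + s.dataGiB, 0);

// Derive the per-chunk averages and each shard's share of the data.
const summary = shards.map((s) => ({
  name: s.name,
  gibPerChunk: s.dataGiB / s.chunks,
  docsPerChunk: Math.floor(s.docs / s.chunks),
  dataSharePct: (100 * s.dataGiB) / totalGiB,
}));

for (const s of summary) {
  console.log(
    `${s.name}: ${s.gibPerChunk.toFixed(2)} GiB/chunk, ` +
      `${s.docsPerChunk} docs/chunk, ${s.dataSharePct.toFixed(2)} % of data`
  );
}
```

Run this way, the data volume comes out nearly even between the two shards, while the average chunk on RepSet1 is more than a hundred times larger than on RepSet2.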

Example of document:

    {
      "_id" : ObjectId("5a68e11aedd1713655361323"),
      "size" : 524288000,
      "file" : {
        "archive_time" : "2018-01-24T19:40:10.717646",
        "checksum" : null,
        "creation_time" : "2017-12-22T14:44:12.643444",
        "part" : ".part000000",
        "tags" : null,
        "size" : 524288000,
        "group" : "root",
        "filename" : "data/docker_compose/prod_test/data_set/file500",
        "owner" : "root",
        "inode" : 1310730
      },
      "document_name" : "file500",
      "folder" : "priv/s002/_1",
      "user" : {
        "group" : "priv",
        "rgrp" : "global_n",
        "name" : "s002"
      }
    }

Looking into the logs, 12 of these 13 failures came from moveChunk with the ChunkTooBig error quoted further down.

My questions:

- Is there an issue? I believe the balancing is wrong, considering the big difference between nChunks. If so, why? This seems to be the obvious symptom:

      chunks: 26,
      'estimated data per chunk': '45.36GiB',
      'estimated docs per chunk': 91121364

- If the balancer is blocked because the chunks are too big, what options do I have?

- How can I confirm that the sharding has been done properly?

- Suggestions?

Thanks

Edit:

To give further detail about monotonicity, the only thing that could cause this would be the config replica set:

    database: { _id: 'config', primary: 'config', partitioned: true },
    collections: {
      'config.system.sessions': {
        shardKey: { _id: 1 },
        unique: false,
        balancing: true,
        chunkMetadata: [
          { shard: 'RepSet1', nChunks: 512 },
          { shard: 'RepSet2', nChunks: 512 }
        ],

The moveChunk error seen in 12 of the 13 failures:

"msg":"Error while doing moveChunk","attr":{"error":"ChunkTooBig: Cannot move chunk: the maximum number of documents for a chunk is 497102, the maximum chunk size is 134217728, average document size is 540. Found 501324 documents in chunk

The highest document count in a chunk is about 600k, which is not far from the threshold.
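The numbers in that log line can be cross-checked with a little arithmetic. This is a sketch under one assumption: that the document limit is twice the maximum chunk size divided by the average document size. The factor 2 is inferred purely from the logged values, not taken from the MongoDB source.

```javascript
// Values copied from the ChunkTooBig log line above.
const maxChunkSizeBytes = 134217728; // 128 MiB
const avgDocSizeBytes = 540;
const docsFound = 501324;

// Assumption: limit = 2 * floor(maxChunkSize / avgDocSize).
// The factor 2 is inferred from the logged numbers only.
const maxDocsPerChunk = 2 * Math.floor(maxChunkSizeBytes / avgDocSizeBytes);

// How many documents the rejected chunk is over the limit.
const overBy = docsFound - maxDocsPerChunk;
console.log({ maxDocsPerChunk, overBy });
```

Under that assumption the computed limit matches the 497102 reported in the log, and the rejected chunk exceeds it by only a few thousand documents.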

The other error was:

"msg":"Error while doing moveChunk","attr":{"error":"Interrupted: Failed to contact recipient shard to monitor data transfer :: caused by :: operation was interrupted"}}

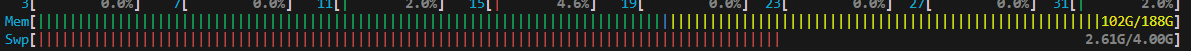

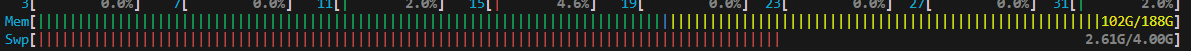

I have plenty of storage space and enough cores. Memory might be low:

Asked by Pobe

(101 rep)

Jun 5, 2025, 12:34 PM

Last activity: Jun 7, 2025, 06:43 AM
