CPU and Load Average Conflict on EC2 server

4 votes, 2 answers, 2395 views

I am having trouble understanding which server resource is causing lag in my Java game server. In the last patch of my game server, I updated my EC2 LAMP server from **apache2.2, php5.3, mysql5.5** to **apache2.4, php7.0, mysql5.6**. I also updated the game itself to include, among other things, many more monster instances that are looped through on every game loop.

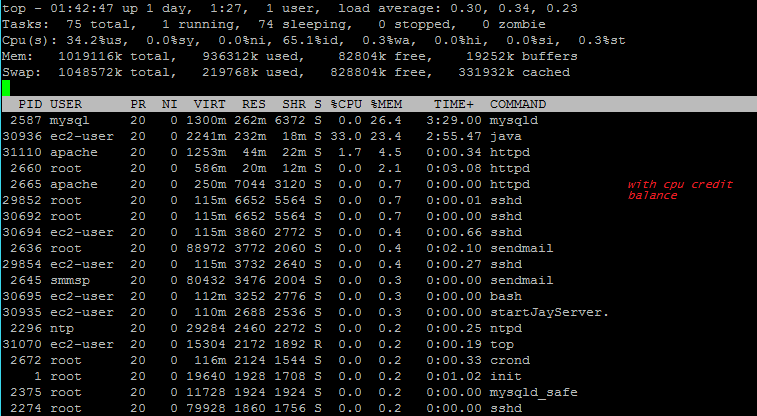

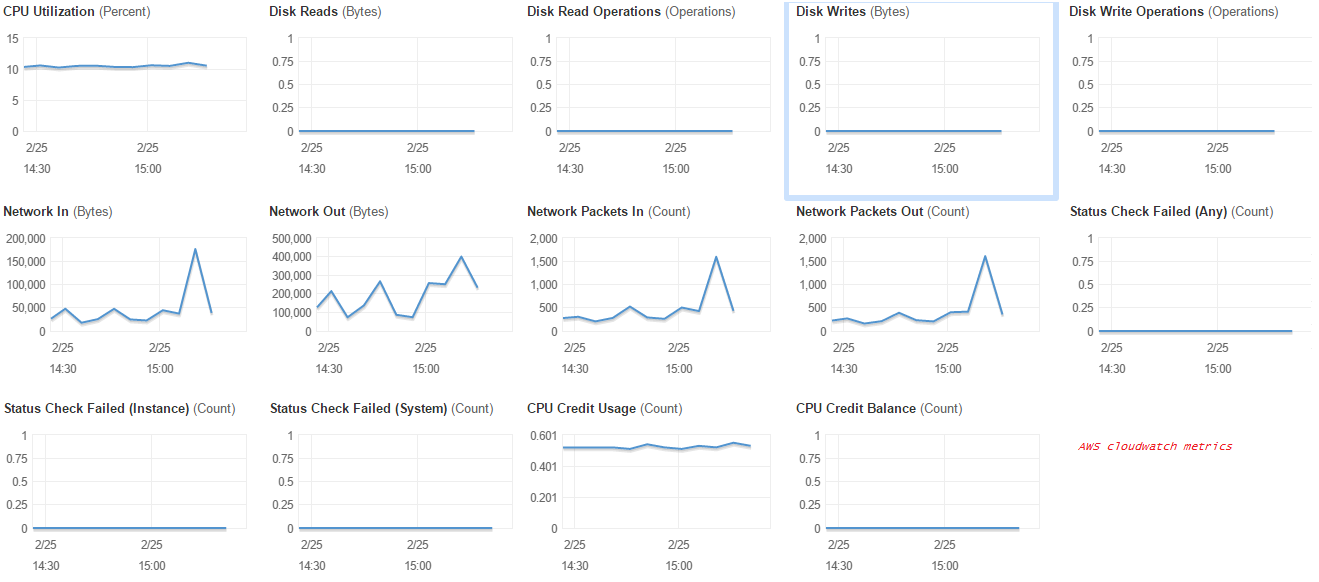

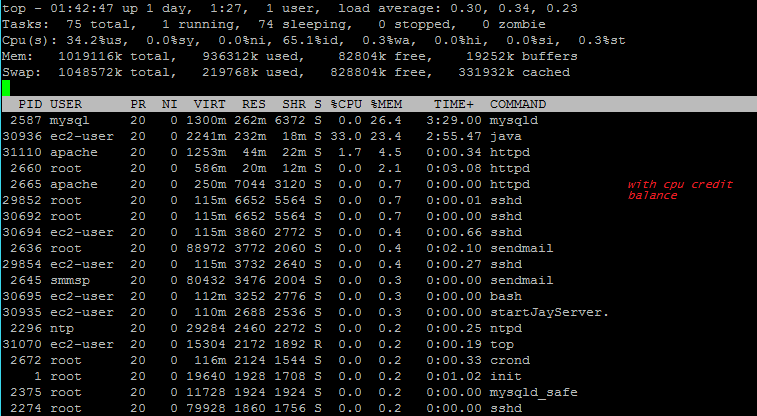

Here is output from right when my game server starts up:

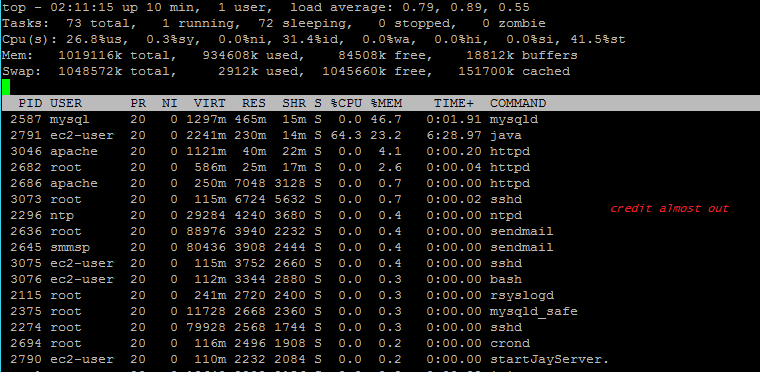

Here is output from a few minutes later:

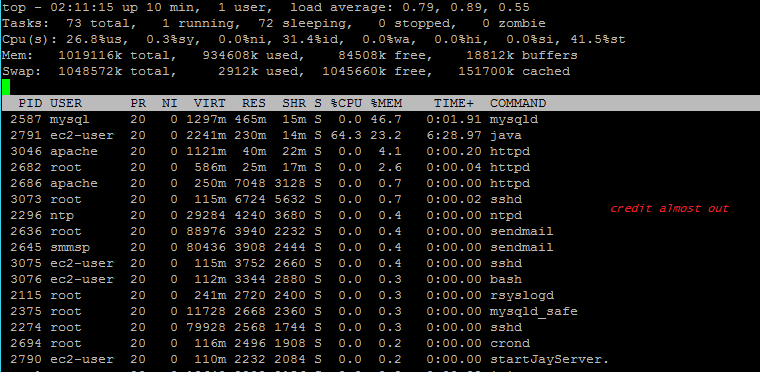

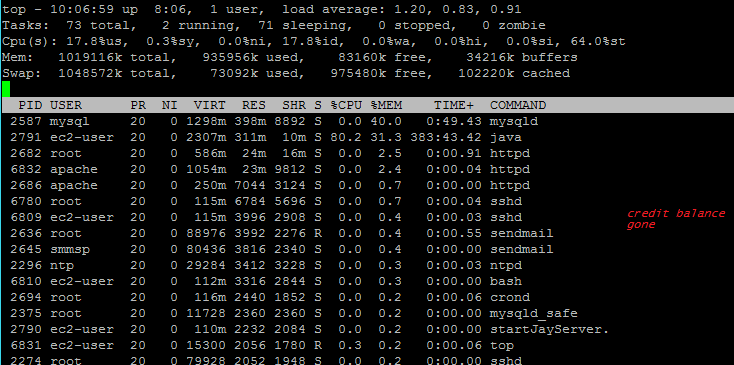

And here is output from the next morning:

As you can see in the images, the CPU usage of my Java process levels off around 80% in the last screenshot, yet the load average goes to 1.20. I have even seen it go as high as 2.7 this morning. The CPU credits determine how much actual CPU time my server gets, so it makes sense that the percentage goes up as my credit balance diminishes, but why does my server lag at 80%?
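For reference, a load average is usually read relative to the number of CPUs. The sketch below is only an illustration, not part of the server code: `load_per_cpu` is a hypothetical helper, and the figures 1.20 and 2.7 are the ones quoted above. On Linux the load average counts tasks that are runnable or in uninterruptible sleep, so a value above 1.0 per vCPU suggests work is queuing, regardless of the percentage a single process shows.

```python
import os


def load_per_cpu(load_avg: float, cpu_count: int) -> float:
    """Return the load average normalised by the number of CPUs.

    Hypothetical helper for illustration: a result above 1.0 means
    more tasks wanted CPU (or were blocked in uninterruptible sleep)
    than there were CPUs to serve them.
    """
    if cpu_count < 1:
        raise ValueError("cpu_count must be at least 1")
    return load_avg / cpu_count


if __name__ == "__main__":
    # Figures quoted in the question, on an instance with 1 vCPU.
    for quoted in (1.20, 2.7):
        print(f"load {quoted} on 1 vCPU -> {load_per_cpu(quoted, 1):.2f} per CPU")

    # Live values on the machine running this script (Unix only).
    one_min, five_min, fifteen_min = os.getloadavg()
    cpus = os.cpu_count() or 1
    print(f"current 1-minute load per CPU: {load_per_cpu(one_min, cpus):.2f}")
```

Read this way, a load of 1.20 on a single vCPU already means slightly more work is waiting than the CPU can serve, even though the process itself reports 80%.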

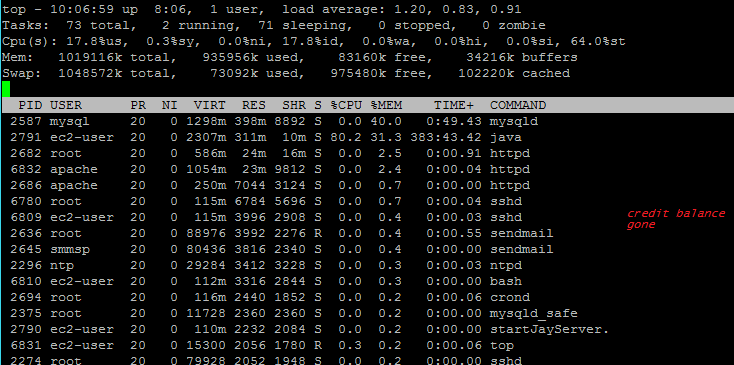

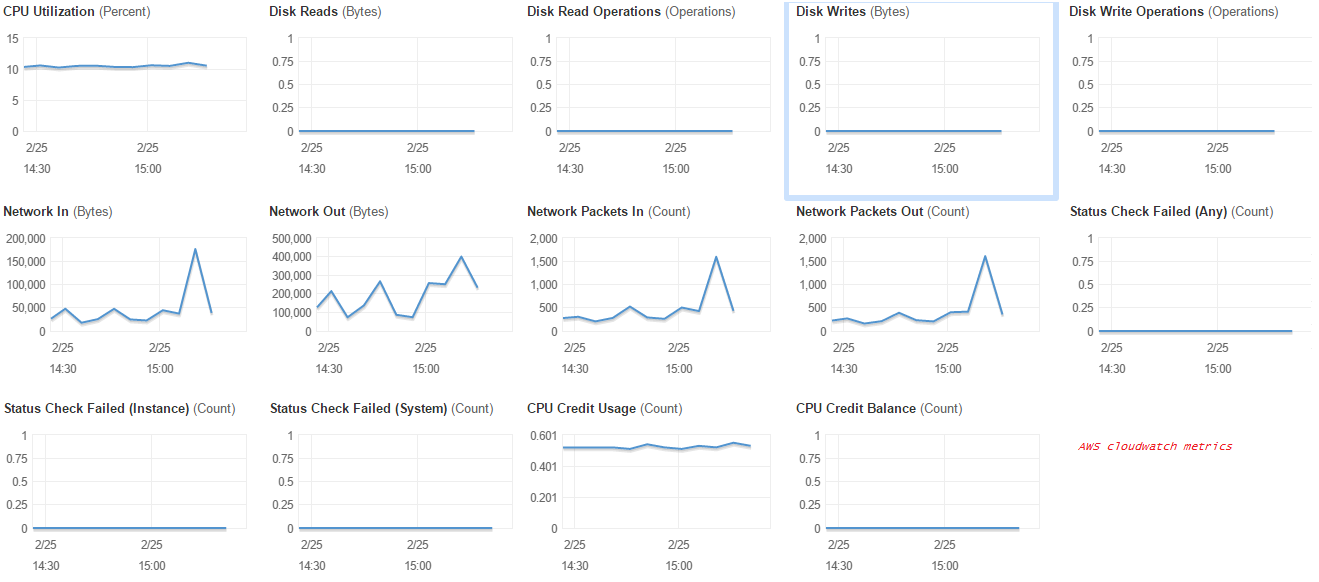

On my Amazon EC2 metrics I see CPU at 10% (which confuses me even more):

Right when I start up my server, my MMORPG does not lag at all. Then, as soon as my CPU credits are depleted, it starts to lag. This makes me think the problem is CPU-bound, but when I see 10% and 80% I don't understand why. Any help would be greatly appreciated. I am on a t2.micro instance, so it has 1 vCPU. The next instance size up nearly doubles the price and still has 1 vCPU, but with more credits.
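To put rough numbers on the credit behaviour described above, here is a minimal sketch of the arithmetic. It assumes the figures AWS documents for t2.micro (6 credits earned per hour, and one credit equal to one vCPU running at 100% for one minute); the function name and the starting balance of 30 credits are hypothetical, chosen only for illustration.

```python
import math

# Assumption: t2.micro earns 6 CPU credits per hour (per AWS documentation).
T2_MICRO_CREDITS_PER_HOUR = 6.0


def minutes_until_depleted(balance: float, utilization: float,
                           earn_per_hour: float = T2_MICRO_CREDITS_PER_HOUR) -> float:
    """Estimate minutes until the credit balance reaches zero.

    balance      -- current credit balance (hypothetical input)
    utilization  -- sustained CPU use as a fraction of one vCPU (0.0 to 1.0)

    One credit is one vCPU-minute at 100%, so running at `utilization`
    spends `utilization` credits per minute while `earn_per_hour / 60`
    credits per minute are earned back.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0.0 and 1.0")
    earn_per_minute = earn_per_hour / 60.0
    net_drain = utilization - earn_per_minute
    if net_drain <= 0:
        # At or below the baseline the balance never runs out.
        return math.inf
    return balance / net_drain


if __name__ == "__main__":
    # Hypothetical example: 30 credits in the bank, sustained 80% CPU.
    print(f"{minutes_until_depleted(30, 0.80):.1f} minutes until credits run out")
    # At exactly the earn rate (10% of one vCPU) the balance holds steady.
    print(minutes_until_depleted(30, 0.10))
```

Under these assumptions the earn rate works out to 0.1 credits per minute, i.e. 10% of one vCPU, which is the sustained level the instance can hold once the balance is gone.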

Long story short, I want to fully understand what is going on, as the 80% number is throwing me. I don't just want to throw money at the problem.

Asked by KisnardOnline

(143 rep)

Feb 25, 2017, 03:46 PM

Last activity: Aug 9, 2022, 06:10 AM
