**The problem**: The X server serves fonts at a fixed resolution of **100dpi**, rather than the current window system resolution (xdpyinfo | grep -F resolution).

**A bit of theory**. There are legacy server-side fonts which are sent to X clients over the network (via TCP or UNIX socket) either by the X server itself, or by a separate _X Font Server_ (single or multiple). Unlike the usual client-side fonts (Xft, GTK 2+, Qt 2+), the "server" backend (also called the _core X font_ backend) does not support anti-aliasing, but supports network transparency (that is, bitmaps, without any alpha channel, are sent over the network). At the application level, server-side fonts are specified not as an [XftFontStruct](https://keithp.com/~keithp/talks/xtc2001/paper/) (which most often translates into the familiar DejaVu Sans Mono:size=12:antialias=true), but as an [_XLFD_](https://wiki.archlinux.org/index.php/X_Logical_Font_Description). If we are talking about a local machine, then the same font file can be registered in both font backends at once and be available to both modern GTK and Qt-based applications, and legacy ones (Xt, Athena, Motif, GTK 1.2, Qt 1.x).
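For readers unfamiliar with the format, an XLFD is a string of 14 hyphen-separated fields. The following is only a minimal sketch of how such a name breaks down; `parse_xlfd` is a hypothetical helper written for illustration (not part of any X library), and it assumes that no field value contains a literal hyphen.

```python
# Field names in the order defined by the XLFD convention.
XLFD_FIELDS = (
    "FOUNDRY", "FAMILY_NAME", "WEIGHT_NAME", "SLANT", "SETWIDTH_NAME",
    "ADD_STYLE_NAME", "PIXEL_SIZE", "POINT_SIZE", "RESOLUTION_X",
    "RESOLUTION_Y", "SPACING", "AVERAGE_WIDTH", "CHARSET_REGISTRY",
    "CHARSET_ENCODING",
)


def parse_xlfd(name):
    """Split an XLFD font name into a dict keyed by field name.

    Hypothetical helper for illustration only; assumes no field
    contains a literal hyphen.
    """
    if not name.startswith("-"):
        raise ValueError("an XLFD name must start with '-'")
    values = name[1:].split("-")
    if len(values) != len(XLFD_FIELDS):
        raise ValueError(
            f"expected {len(XLFD_FIELDS)} fields, got {len(values)}"
        )
    return dict(zip(XLFD_FIELDS, values))


fields = parse_xlfd(
    "-bitstream-charter-bold-r-normal--12-120-75-75-p-75-iso8859-1"
)
# POINT_SIZE is in tenths of a point; the resolution fields are in dpi.
print(fields["POINT_SIZE"], fields["RESOLUTION_X"], fields["RESOLUTION_Y"])
```

The fields that matter for this question are PIXEL_SIZE, POINT_SIZE, RESOLUTION_X and RESOLUTION_Y.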

Historically, there were raster server-side fonts (*.pcf), and a raster has a resolution of its own (not necessarily the same as the window system resolution). Therefore, XLFD has fields such as RESOLUTION_X and RESOLUTION_Y. For a raster font not to look ugly when rendered onto the screen and still have the requested rasterized glyph size (PIXEL_SIZE), the raster resolution must be close to the screen resolution, therefore raster fonts were usually shipped with native resolutions of **75dpi** and **100dpi** (that's why we still have directories such as /usr/share/fonts/X11/75dpi and /usr/share/fonts/X11/100dpi). So, the lines below represent the same 12 pt font at 75 and 100 dpi, respectively:

-bitstream-charter-bold-r-normal--12-120-75-75-p-75-iso8859-1

-bitstream-charter-bold-r-normal--17-120-100-100-p-107-iso8859-1

With vector fonts, one can instead specify zeroes (0) or even asterisks (*) in the RESOLUTION_X and RESOLUTION_Y fields, and, in theory, my X server should give me exactly the font requested. This is directly stated in the _Arch Linux Wiki_ article at the link above:

> Scalable fonts were designed to be resized. A scalable font name, as shown in the example below, has zeroes in the pixel and point size fields, the two resolution fields, and the average width field.
>
> ...
>
> To specify a scalable font at a particular size you only need to provide a value for the POINT_SIZE field, the other size related values can remain at zero. The POINT_SIZE value is in tenths of a point, so the entered value must be the desired point size multiplied by ten.
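The rule quoted above can be sketched in a few lines of Python. This is only an illustration: `scalable_xlfd` is a hypothetical helper, its default field values are assumptions, and whether a scalable font with the given foundry and family is actually installed depends on the system.

```python
def scalable_xlfd(foundry, family, point_size, weight="medium", slant="r",
                  setwidth="normal", spacing="m", charset="iso10646-1"):
    """Build a scalable-font XLFD with only POINT_SIZE filled in.

    PIXEL_SIZE, RESOLUTION_X, RESOLUTION_Y and AVERAGE_WIDTH stay at zero;
    POINT_SIZE is the desired point size multiplied by ten.
    """
    decipoints = round(point_size * 10)
    return (f"-{foundry}-{family}-{weight}-{slant}-{setwidth}-"
            f"-0-{decipoints}-0-0-{spacing}-0-{charset}")


# A 12 pt request for the Charter face used in the raster example above.
print(scalable_xlfd("bitstream", "charter", 12,
                    weight="bold", spacing="p", charset="iso8859-1"))
```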

So, either of the following two queries should return a **12pt** Courier New font at the window system resolution:

-monotype-courier new-medium-r-normal--*-120-*-*-m-*-iso10646-1

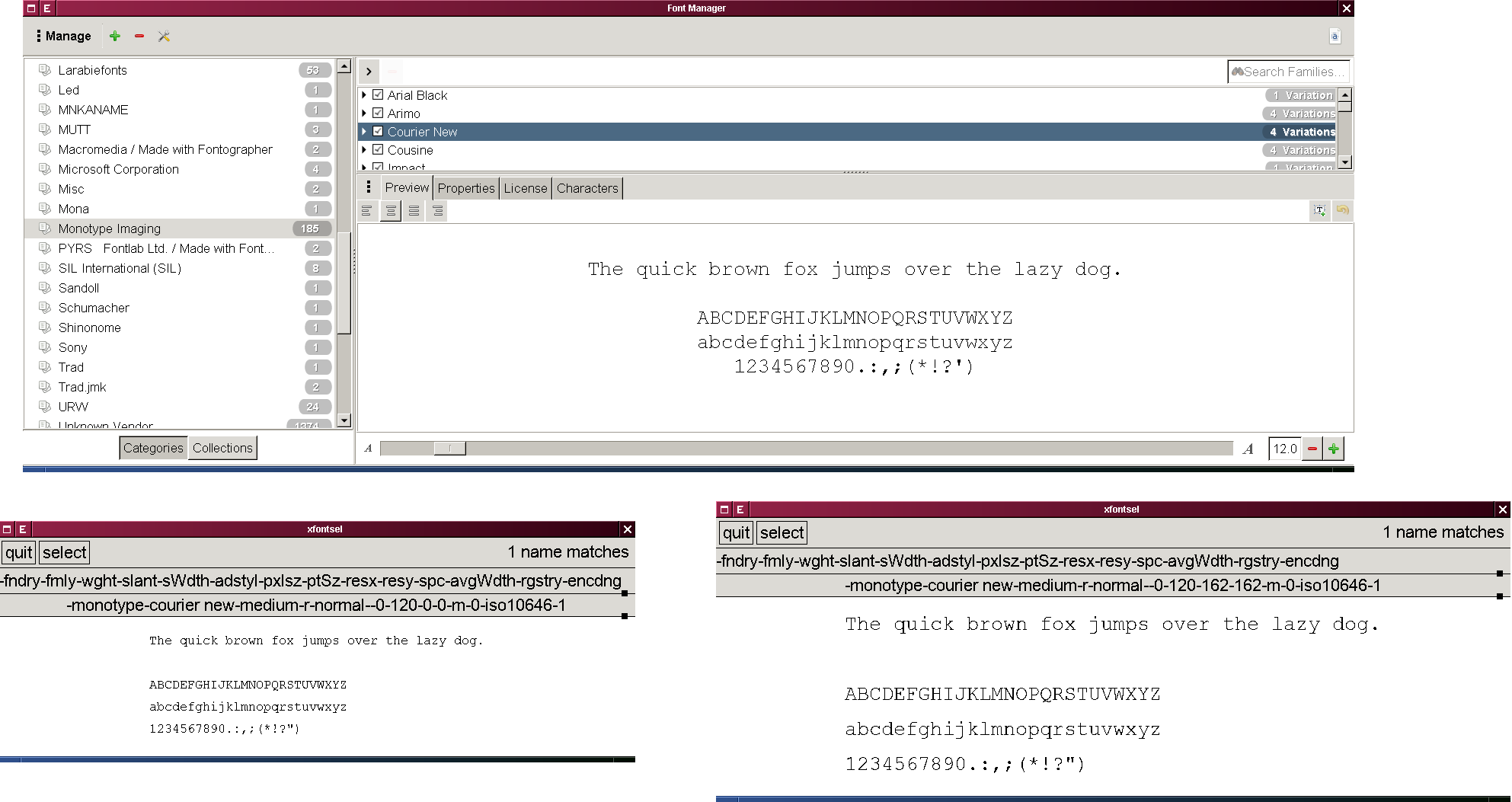

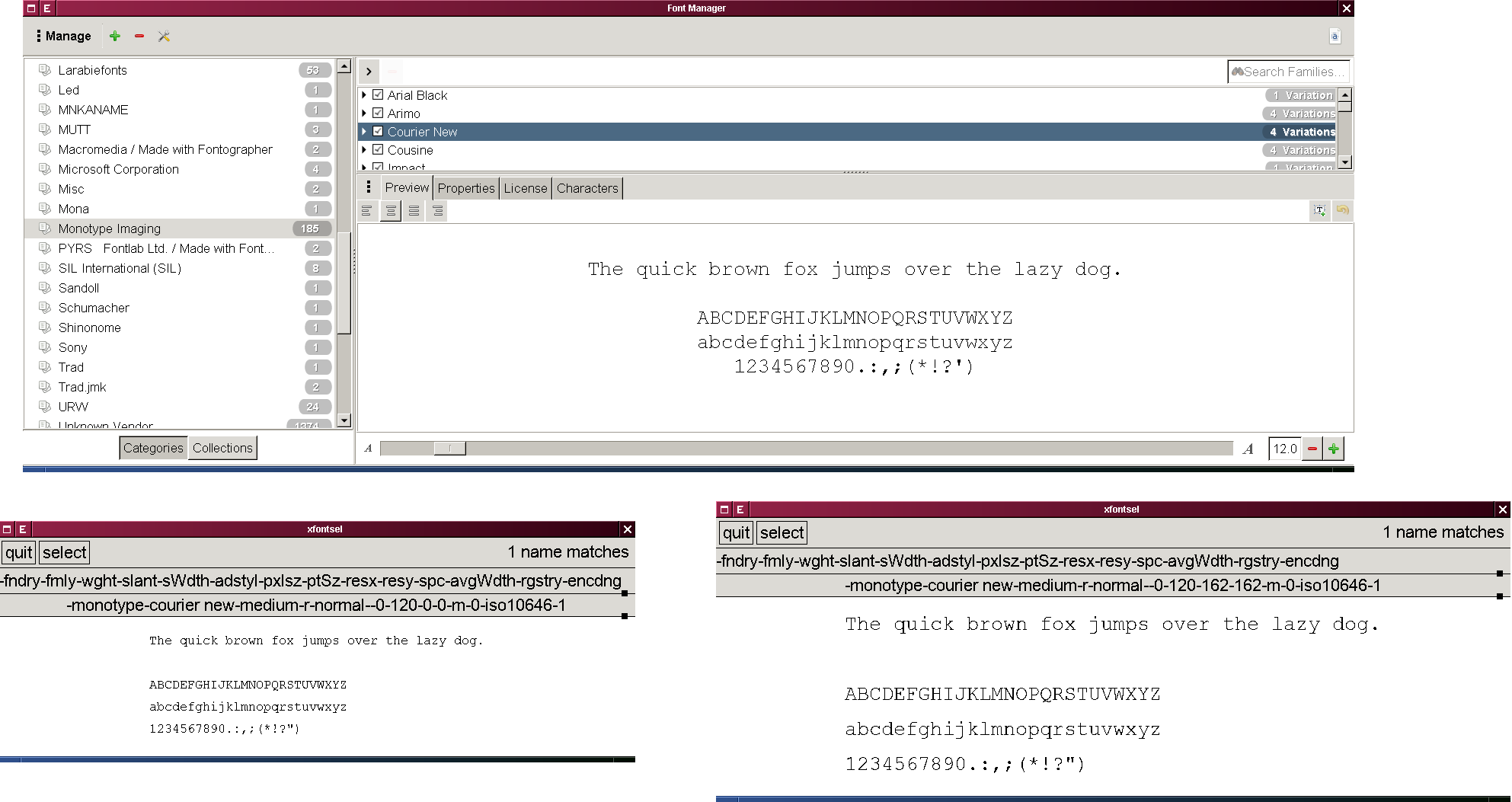

-monotype-courier new-medium-r-normal--0-120-0-0-m-0-iso10646-1

In practice, unless I explicitly set the RESOLUTION_X and RESOLUTION_Y fields to **162** (and no one in his right mind would do so, since it would require rewriting dozens of Xresources lines every time one changes his monitor), the X server defaults to rendering the font at **100dpi** instead of **162**. The difference between **17** and **27** pixels (a factor of 1.62 = 162 / 100) is quite noticeable. Here's an example for a modern _Debian 10_ box:

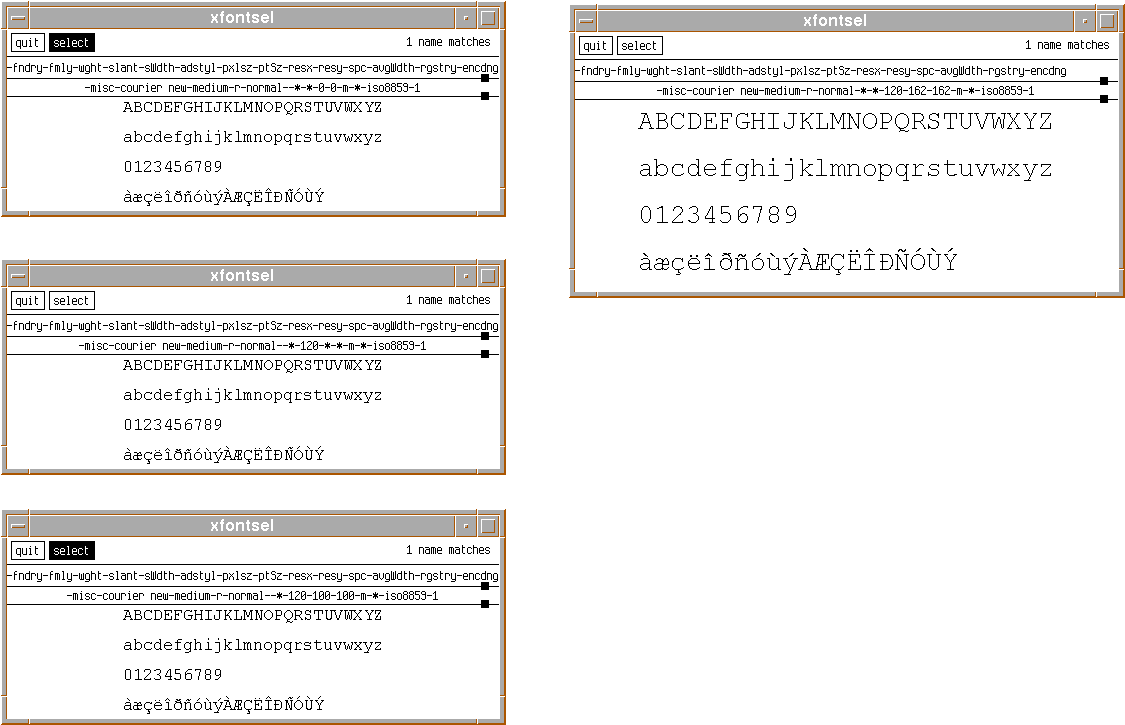

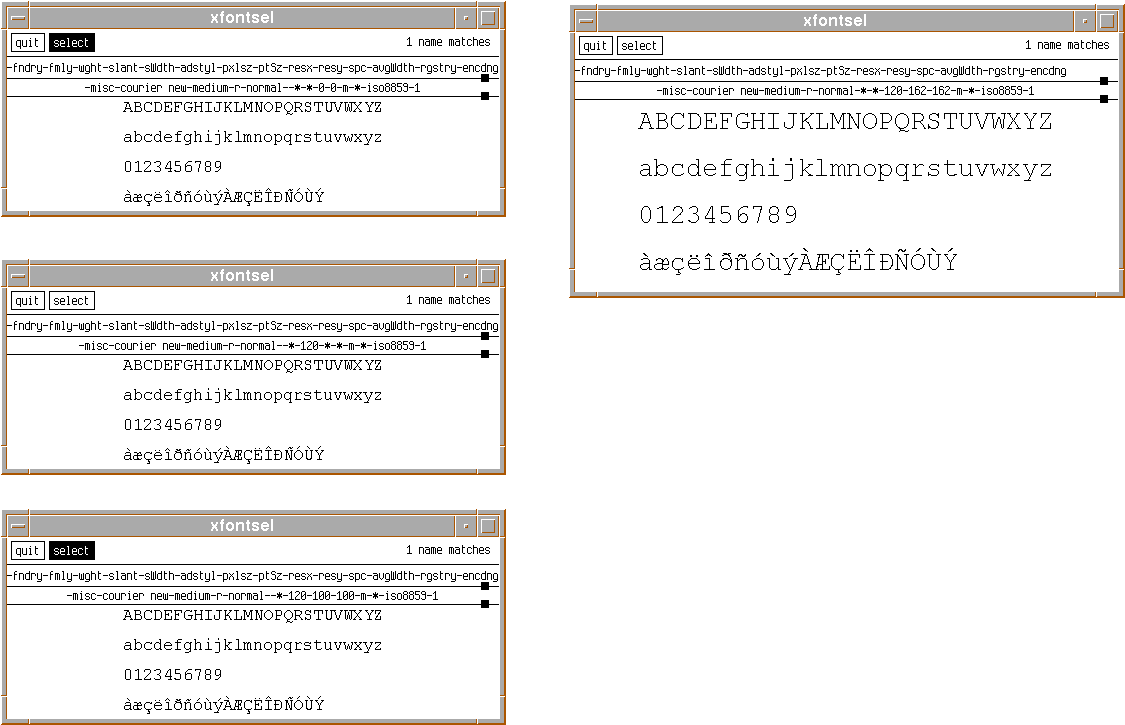
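The 17 and 27 pixel figures follow from the point-size arithmetic. Below is a rough sketch, assuming the XLFD definition of 72.27 points per inch and simple rounding to the nearest pixel; the rounding a real X server applies may differ, and `pixel_size` is a hypothetical name used only here.

```python
# The XLFD convention defines a point as 1/72.27 of an inch.
POINTS_PER_INCH = 72.27


def pixel_size(decipoints, dpi):
    """Approximate PIXEL_SIZE for a POINT_SIZE (tenths of a point) at `dpi`.

    Assumption: nearest-integer rounding; the server may round differently.
    """
    return round(decipoints / 10 * dpi / POINTS_PER_INCH)


# 12 pt (POINT_SIZE = 120) at the resolutions discussed in this question.
for dpi in (75, 100, 162):
    print(dpi, pixel_size(120, dpi))
```

Under these assumptions a 12 pt font comes out at 12, 17 and 27 pixels for 75, 100 and 162 dpi respectively, which matches the PIXEL_SIZE values in the raster XLFDs and the sizes observed here.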

I thought this regression was a consequence of people gradually cutting out obsolete subsystems from X11, but in _Debian Woody_, released in 2002 and having a 2.2 kernel, I saw exactly the same thing:

The only difference is that _Debian Woody_ renders fonts in a "cleaner" manner, apparently, applying hinting on the server side, before sending bitmaps over the network.

So this is not a regression. The problem has always been there and equally affects all vector font types (_TrueType_, _OpenType_, _Type 1_).

**Now, the question**. Is there a way, without hard-coding window system resolution into user settings for each individual resource, to get by with less pain than recommended by the author of the [_Sharing Xresources between systems_](https://jnrowe.github.io/articles/tips/Sharing_Xresources_between_systems.html) article?

Is it possible to solve the problem by changing the global configuration of the X server itself or the libraries it relies on (libfreetype, libxfont)?

Asked by Bass

(281 rep)

Feb 3, 2021, 11:30 AM

Last activity: Nov 1, 2024, 08:51 PM
