measuring HDR IPoIB, and anything 10GbE or higher, copy speed

I am dealing with large data files (greater than 10 GB) and am working with IPoIB (IP over InfiniBand).

I need something better than the traditional 1gbps wired network speed.

Background:

- on a 1gbps wired network, an SSH *secure copy* or samba file transfer between my linux servers, as well as windows pc's, runs at **112 MB/sec**. **note:** megabytes per second. We want faster.

- I have a mellanox HDR infiniband switch and adapter cards in my linux servers;

- HDR is 100 gbps

- I am running RHEL 7.9 x86-64 and have the latest mellanox iso drivers installed

- In RHEL network manager I have the ib0 interface working with a static IP address as **Datagram** and my infiniband network between the few RHEL linux servers at this point is working *fine*.... *it is working*.

- In doing a secure copy as a speed test I get a solid ~260 MB/sec, even if I have 3 servers bombarding the same destination server with a 30 GB tar file.

- my thought is a 1gbps wired network is 1000 megabits per second {divided by 8 bits in a byte} is 125 MB/sec maximum and I get 112 MB/sec (89%) so I am cool with that.

- going from 1gbps to 100gbps infiniband I hoped to see close to a corresponding 11200 MB/sec *secure copy* speed but I only got 260 MB/sec; 260 / 11200 = 2%... which is abysmal?
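The arithmetic in the bullets above can be written out as a small sketch. It assumes 1 gbps = 1000 megabits per second and ignores all protocol overhead, so the ceiling for the 100 gbps link comes out as 12500 MB/sec rather than the 11200 MB/sec figure obtained by scaling the measured 112 MB/sec. The function names are made up for illustration.

```python
def line_rate_mb_per_s(gbps: float) -> float:
    """Theoretical ceiling in MB/sec for a link, ignoring protocol overhead."""
    return gbps * 1000 / 8  # megabits per second divided by 8 bits per byte


def efficiency(measured_mb_per_s: float, gbps: float) -> float:
    """Fraction of the theoretical ceiling that a measured transfer reached."""
    return measured_mb_per_s / line_rate_mb_per_s(gbps)


# Measured scp speeds quoted in the question.
for label, gbps, measured in [("1 gbps wired", 1, 112), ("100 gbps IPoIB", 100, 260)]:
    ceiling = line_rate_mb_per_s(gbps)
    share = efficiency(measured, gbps)
    print(f"{label}: ceiling {ceiling:.0f} MB/sec, measured {measured} MB/sec, {share:.1%}")
```

Either way the result is the same: roughly 2% of what the link could carry.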

Can someone let me know what kind of speeds are realistic in this scenario, given a 100 gbps HDR infiniband? Running `ethtool ib0` reports the link at 100,000, and that along with my one scp test is all I know at this point.

Is there some limit of scp and ssh manifesting itself now that I have a 100 gbps link?

What is a better *speed test* I can do in this scenario? The goal is eventually to get the maximum file transfer speed between multiple servers on a simple LAN, using scp and eventually nfs. *I am aware of nfs over rdma but I am taking baby steps*.
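One way to take ssh out of the picture is to time a plain, unencrypted TCP stream. The following is only a minimal sketch of that idea, not a tuned benchmark: it runs sender and receiver in one process over the loopback address, and `measure_tcp_throughput` and its parameters are names made up here. To test the ib0 link itself, the receiving half would have to run on the other server and the sender would connect to that server's ib0 address.

```python
import socket
import threading
import time


def _sink(server: socket.socket, result: dict) -> None:
    """Accept one connection and count the bytes received until EOF."""
    conn, _ = server.accept()
    total = 0
    with conn:
        while True:
            data = conn.recv(1 << 20)
            if not data:
                break
            total += len(data)
    result["bytes"] = total


def measure_tcp_throughput(host: str = "127.0.0.1", total_mb: int = 64) -> float:
    """Send total_mb MiB of zeros over plain TCP and return the rate in MB/sec."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind((host, 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]

    result: dict = {}
    receiver = threading.Thread(target=_sink, args=(server, result))
    receiver.start()

    chunk = b"\0" * (1 << 20)  # 1 MiB per send
    start = time.perf_counter()
    with socket.create_connection((host, port)) as client:
        for _ in range(total_mb):
            client.sendall(chunk)
    receiver.join()  # wait until the receiver has drained everything
    elapsed = time.perf_counter() - start
    server.close()

    return result["bytes"] / 1e6 / elapsed


if __name__ == "__main__":
    print(f"{measure_tcp_throughput():.0f} MB/sec over plain TCP")
```

A single Python stream is itself CPU-bound, so this gives a rough lower bound on what the link can carry, not a measurement of the link's maximum.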

I would also be interested in seeing reports (answers) from anyone running a **wired** network that is 10GbE or better, which would include all those slower infinibands like QDR, EDR, FDR, and so on, on what transfer speeds should be.

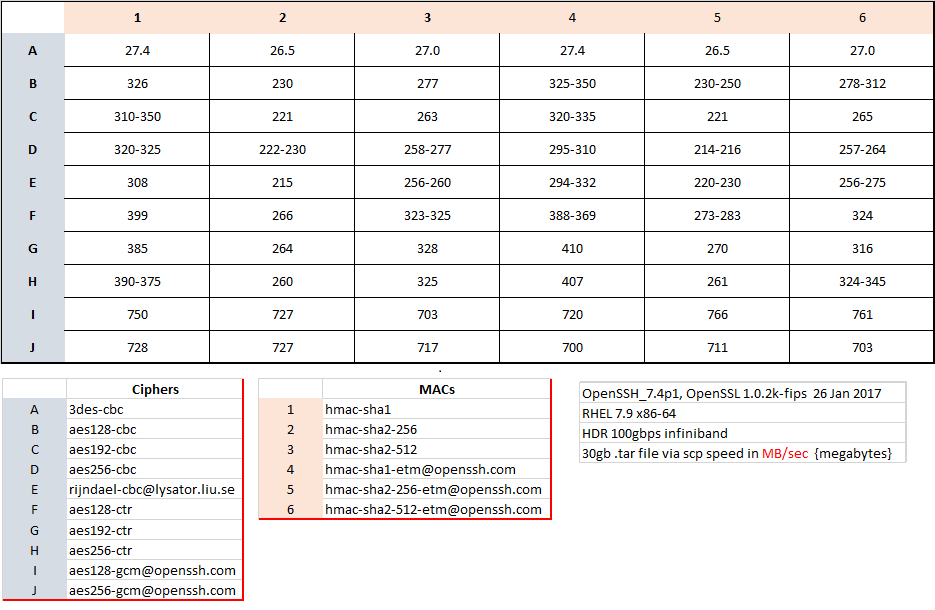

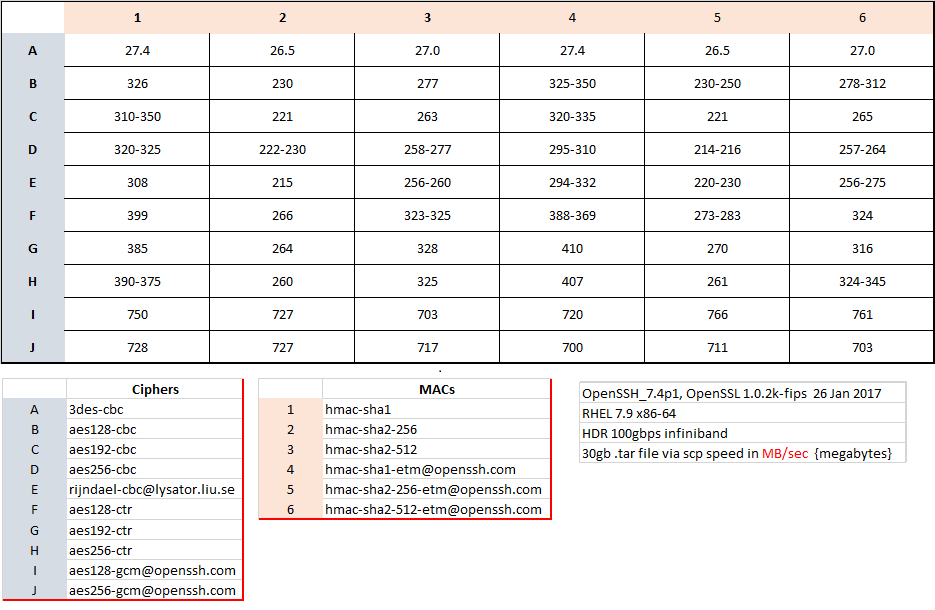

**update:** I did the following test. It seems that while I have 100 gbps network speed, the transfer is limited by how fast the cpu can work on the chosen cipher (and maybe hmac). So it would not make sense to buy an expensive infiniband setup if all you are going to do is scp. *I only used scp here because of its convenience in reporting transfer speed in MB/sec.*

I was the only one on both servers, in my server room. Both servers were identical in hardware, and are 4 socket servers having intel 8628 cpu's, freq = 2.9ghz.

**note:** on a 1 gbps traditional wired network using aes-###ctr and hmac-sha2-aes### I get a solid 112 MB/sec scp speed all day long.
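To get a feel for how a per-byte cryptographic step can cap throughput on one core, here is a rough sketch that times HMAC-SHA256 over a stream of zero bytes using only the Python standard library. It is an illustration under assumptions, not a model of what ssh does internally: the function name, key, and chunk size are made up, and the stdlib has no AES, so the cipher half is not covered.

```python
import hashlib
import hmac
import time


def hmac_sha256_mb_per_s(total_mb: int = 256, chunk_kb: int = 64) -> float:
    """Feed total_mb MiB through HMAC-SHA256 on one core and return MB/sec."""
    key = b"k" * 32  # arbitrary illustrative key
    chunk = b"\0" * (chunk_kb * 1024)
    n_chunks = total_mb * 1024 // chunk_kb

    mac = hmac.new(key, digestmod=hashlib.sha256)
    start = time.perf_counter()
    for _ in range(n_chunks):
        mac.update(chunk)
    mac.digest()  # finalize so the whole computation is included in the timing
    elapsed = time.perf_counter() - start

    return n_chunks * len(chunk) / 1e6 / elapsed


if __name__ == "__main__":
    print(f"HMAC-SHA256 on one core: {hmac_sha256_mb_per_s():.0f} MB/sec")
```

If the figure this prints is far below the link's line rate, a single scp stream using that MAC cannot be expected to fill the link, whatever the network hardware.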

Asked by ron

(8647 rep)

Mar 1, 2021, 04:07 PM

Last activity: Dec 29, 2021, 07:42 PM
