Is a worst-case PCIe MSI interrupt latency jitter of over 100 µs normal?


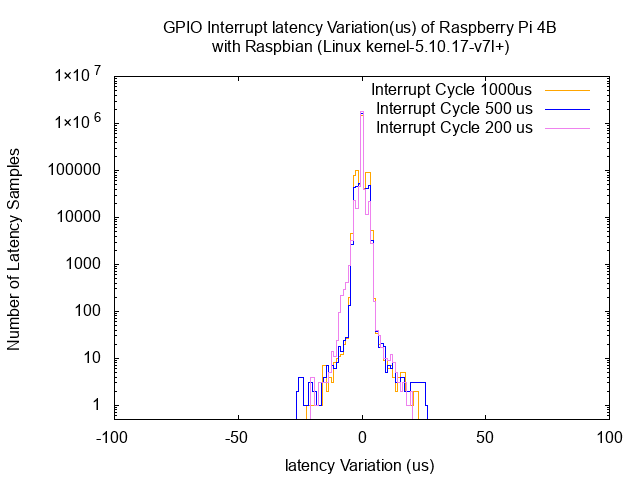

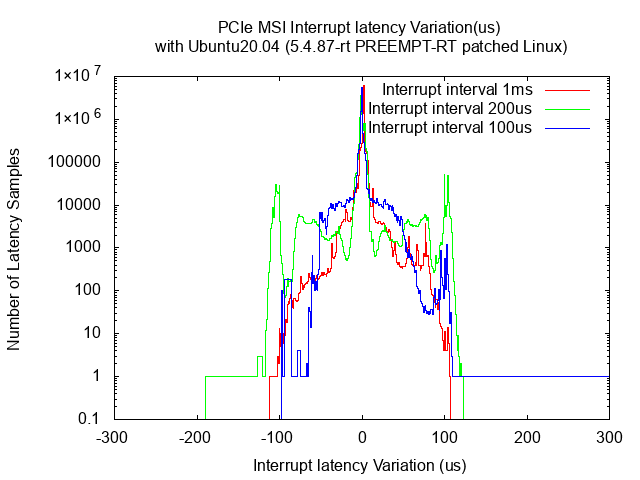

I am trying to measure interrupt latency jitter in Linux, especially in the worst case.

I use two testbeds: a Raspberry Pi 4B, and a high-end PC with an Intel i9 CPU and an ASUS motherboard.

My questions are:

1. Why do PCIe MSI interrupts have larger latency jitter than GPIO interrupts?

2. Is it normal for a modern PCIe MSI interrupt to show about 100 µs of latency variation in the worst case (is there anything I missed)? How can I minimize this latency variation (jitter)?


Test setup: a signal generator produces a square wave that drives two paths in parallel:

1. GPIO: the signal goes to a GPIO pin of a Raspberry Pi 4B running Linux.
2. PCIe MSI: the signal goes to an FPGA, which raises a PCIe MSI on the high-end PC (i9-9900K) running Linux.

irq_handler function of the driver code on the high-end PC:

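    #include <linux/interrupt.h>
    #include <linux/spinlock.h>
    #include <linux/wait.h>

    /* Record stored in the FIFO. struct timespec64 has no tsc_high/tsc_low
     * members, so a dedicated struct is used here (name is illustrative). */
    struct tsc_stamp {
        u32 tsc_high;
        u32 tsc_low;
    };

    // IRQ handler
    static irqreturn_t fPCI_event_isr(int irq, void *cookie)
    {
        struct tsc_stamp tsc_now = { .tsc_high = 0, .tsc_low = 0 };
        struct fPCI_dev *drv_data = cookie;
        int nwritten;
        int fifo_size;

        // get TSC timestamp (get_tsc_cpuid is the driver's own macro, not shown)
        get_tsc_cpuid(tsc_now.tsc_high, tsc_now.tsc_low);

        spin_lock(&drv_data->spin_lock);
        nwritten = fpci_fifo_write(drv_data->fifo, &tsc_now, 1);
        fifo_size = fpci_fifo_size(drv_data->fifo); // read under the same lock
        spin_unlock(&drv_data->spin_lock);

        // KERN_INFO is a string prefix: no comma between it and the format
        if (nwritten != 1)
            printk(KERN_INFO "fPCI_event_isr: fifo overflow!\n");

        // wake the user-space reader once the FIFO is more than half full
        if (fifo_size > FIFO_SIZE / 2)
            wake_up(&drv_data->waitqueue);

        return IRQ_HANDLED;
    }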

In the IRQ handler, the current TSC value is first written to a FIFO. A user-space thread then reads the timestamps from the FIFO and computes the interrupt jitter.

The results are shown in the two figures above.


Asked by foool


Sep 14, 2021, 08:08 AM