kcompactd0 using 100% CPU with VMware Workstation 16

11 votes, 5 answers, 12766 views

This is the same problem as reported in Red Hat Bugzilla, [kcompacd0 using 100% cpu](https://bugzilla.redhat.com/show_bug.cgi?id=1694305), which was closed for INSUFFICIENT_DATA.

Also same as

- https://unix.stackexchange.com/questions/458893/vmware-on-linux-host-causes-regular-freezes

- https://unix.stackexchange.com/questions/161858/arch-linux-becomes-unresponsive-from-khugepaged/

Reopening because the solution there doesn't work for me.

Here is my situation:

- Ubuntu **21.10** host and Windows **10** Enterprise guest, with VMware Workstation 16 v**16.2.0** build-18760230

- I'm not doing anything fancy or putting it under heavy load; after just a day of regular, light Windows 10 usage, things start to get wild.

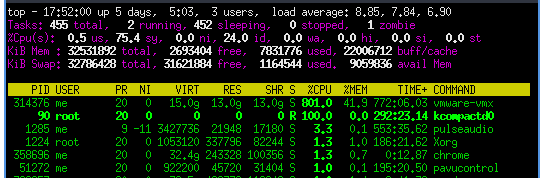

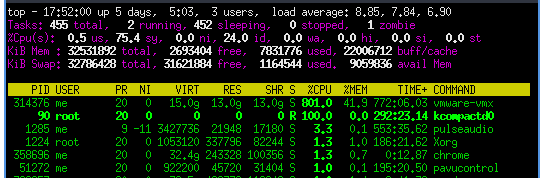

- The process kcompactd0 is constantly using 100% cpu on one core, and vmware-vmx using 100% cpu on eight cores.

- When it happens, it normally lasts for several minutes, then kicks in again after a minute or two.

- _"kcompactd0 goes away only with drop_caches. when it goes 100%, the vmware virtual machine guest is completely unresponsive (windows 10 ltsc vm)"_ So I only tried drop_caches once, and have confirmed the behavior.
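For reference, the `drop_caches` workaround from the quote is usually invoked along the following lines. This is only a sketch: the value `3` (page cache plus dentries and inodes) is an assumption, since the quoted report does not say which value was written, and both the function name and the `SUDO` variable are made up for illustration.

```shell
# Hypothetical helper: flush dirty pages, then ask the kernel to drop
# clean caches.
# $1   optional target file (defaults to the real procfs knob; the
#      parameter exists only so the function can be tried on a scratch file)
# SUDO optional command prefix; defaults to "sudo", set SUDO="" when
#      already running as root
drop_caches() {
  target="${1:-/proc/sys/vm/drop_caches}"
  sync
  # 1 = page cache, 2 = dentries and inodes, 3 = both
  echo 3 | ${SUDO-sudo} tee "$target" >/dev/null
}
```

Calling `drop_caches` with no argument acts on the live system. It only discards clean, reclaimable caches, so it is a workaround rather than a fix.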

As requested upstream, here is more info:


```
$ lsb_release -a
No LSB modules are available.
Distributor ID: Ubuntu
Description: Ubuntu 21.10
Release: 21.10
Codename: impish
```

```
$ grep -r . /sys/kernel/mm/transparent_hugepage/*
/sys/kernel/mm/transparent_hugepage/defrag:always defer defer+madvise [madvise] never
/sys/kernel/mm/transparent_hugepage/enabled:always [madvise] never
/sys/kernel/mm/transparent_hugepage/hpage_pmd_size:2097152
/sys/kernel/mm/transparent_hugepage/khugepaged/defrag:1
/sys/kernel/mm/transparent_hugepage/khugepaged/max_ptes_shared:256
/sys/kernel/mm/transparent_hugepage/khugepaged/scan_sleep_millisecs:10000
/sys/kernel/mm/transparent_hugepage/khugepaged/max_ptes_none:511
/sys/kernel/mm/transparent_hugepage/khugepaged/pages_to_scan:4096
/sys/kernel/mm/transparent_hugepage/khugepaged/max_ptes_swap:64
/sys/kernel/mm/transparent_hugepage/khugepaged/alloc_sleep_millisecs:60000
/sys/kernel/mm/transparent_hugepage/khugepaged/pages_collapsed:0
/sys/kernel/mm/transparent_hugepage/khugepaged/full_scans:19
/sys/kernel/mm/transparent_hugepage/shmem_enabled:always within_size advise [never] deny force
/sys/kernel/mm/transparent_hugepage/use_zero_page:1
```
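In the multiple-choice files of this listing, the value in square brackets is the one currently in effect. A minimal sketch for printing just that active value, assuming a POSIX shell with `sed`; the helper name `thp_active` is made up for illustration:

```shell
# thp_active FILE: print the bracketed (currently selected) option from a
# sysfs multiple-choice file such as .../transparent_hugepage/enabled.
# Hypothetical helper, not part of any package.
thp_active() {
  sed -n 's/.*\[\(.*\)\].*/\1/p' "$1"
}
```

For example, `thp_active /sys/kernel/mm/transparent_hugepage/defrag` would print `madvise` for the state shown in this listing. Files that hold a plain number, such as `khugepaged/defrag`, contain no brackets and produce no output.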

```
$ cat /proc/90/stack | wc
0 0 0
```
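The `90` here is presumably the PID of `kcompactd0` on this machine (an assumption; the post does not say so), and it will differ on other systems. A small sketch for looking the PID up by name instead of hard-coding it; the function name `pids_by_comm` and its second parameter are hypothetical:

```shell
# pids_by_comm NAME [PROC_ROOT]: print the PID of every process whose
# comm name equals NAME. PROC_ROOT defaults to /proc and exists only so
# the function can be exercised against a fake directory tree.
pids_by_comm() {
  name="$1"
  root="${2:-/proc}"
  for f in "$root"/[0-9]*/comm; do
    # Skip unmatched globs and processes that exited in the meantime.
    [ -r "$f" ] || continue
    if [ "$(cat "$f" 2>/dev/null)" = "$name" ]; then
      pid_dir="${f%/comm}"
      echo "${pid_dir##*/}"
    fi
  done
}
```

A possible use is `sudo cat "/proc/$(pids_by_comm kcompactd0)/stack"`. On most kernels `/proc/<pid>/stack` is readable only by root; whether that explains the empty output here is not something the post settles. Where procps is installed, `pgrep -x kcompactd0` should give the same PID.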

```
echo never > /sys/kernel/mm/transparent_hugepage/defrag
echo 0 > /sys/kernel/mm/transparent_hugepage/khugepaged/defrag
echo never > /sys/kernel/mm/transparent_hugepage/enabled
```
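These redirections only succeed from a root shell; prefixing `echo` with `sudo` is not enough, because the redirection is performed by the calling, unprivileged shell. A sketch of the same three writes via `tee`, assuming `sudo` is available; the function name `thp_disable` and the `SUDO` variable are made up for illustration:

```shell
# Hypothetical helper: apply the three THP settings listed just before
# this sketch.
# $1   optional base directory (defaults to the real sysfs path; the
#      parameter exists only so the function can be tried on a scratch dir)
# SUDO optional command prefix; defaults to "sudo", set SUDO="" when
#      already running as root
thp_disable() {
  base="${1:-/sys/kernel/mm/transparent_hugepage}"
  echo never | ${SUDO-sudo} tee "$base/defrag" >/dev/null &&
  echo 0     | ${SUDO-sudo} tee "$base/khugepaged/defrag" >/dev/null &&
  echo never | ${SUDO-sudo} tee "$base/enabled" >/dev/null
}
```

Values written to sysfs this way are runtime settings and do not survive a reboot.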

```
$ grep -r . /sys/kernel/mm/transparent_hugepage/*
/sys/kernel/mm/transparent_hugepage/defrag:always defer defer+madvise madvise [never]
/sys/kernel/mm/transparent_hugepage/enabled:always madvise [never]
/sys/kernel/mm/transparent_hugepage/hpage_pmd_size:2097152
/sys/kernel/mm/transparent_hugepage/khugepaged/defrag:0
/sys/kernel/mm/transparent_hugepage/khugepaged/max_ptes_shared:256
/sys/kernel/mm/transparent_hugepage/khugepaged/scan_sleep_millisecs:10000
/sys/kernel/mm/transparent_hugepage/khugepaged/max_ptes_none:511
/sys/kernel/mm/transparent_hugepage/khugepaged/pages_to_scan:4096
/sys/kernel/mm/transparent_hugepage/khugepaged/max_ptes_swap:64
/sys/kernel/mm/transparent_hugepage/khugepaged/alloc_sleep_millisecs:60000
/sys/kernel/mm/transparent_hugepage/khugepaged/pages_collapsed:0
/sys/kernel/mm/transparent_hugepage/khugepaged/full_scans:19
/sys/kernel/mm/transparent_hugepage/shmem_enabled:always within_size advise [never] deny force
/sys/kernel/mm/transparent_hugepage/use_zero_page:1
```

Asked by xpt (1858 rep) on Nov 30, 2021, 11:19 PM

Last activity: Dec 15, 2023, 03:29 PM
