LVM Device-mapper (dm-2) 100% busy


We have set up LVM striping across 6 disks in our DB server to improve IOPS performance.
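To make the layout concrete, here is a minimal sketch of how a byte offset in the LV maps to a stripe, assuming the 6 stripes and 16 KiB stripe size reported in the `lvdisplay` output further down, and assuming plain round-robin chunk placement. The function names `stripe_for_offset` and `chunks_per_request` are made up for illustration, and which physical disk sits behind each stripe index depends on the PV order, which is not shown here.

```shell
# Sketch only: values copied from the lvdisplay output in this post.
STRIPES=6
STRIPE_SIZE=$((16 * 1024))   # 16 KiB chunk written to one disk before moving on

# stripe_for_offset OFFSET_BYTES
# Prints the 0-based stripe index that holds the given byte offset,
# assuming chunks are laid out round-robin across the stripes.
stripe_for_offset() {
    echo $(( ($1 / STRIPE_SIZE) % STRIPES ))
}

# chunks_per_request REQUEST_BYTES
# Prints how many 16 KiB chunks a chunk-aligned request of that size covers.
chunks_per_request() {
    echo $(( ($1 + STRIPE_SIZE - 1) / STRIPE_SIZE ))
}

echo "full stripe width: $(( STRIPES * STRIPE_SIZE / 1024 )) KiB"
echo "chunks in a 1 MiB request: $(chunks_per_request $((1024 * 1024)))"
for off in 0 16384 32768 98304; do
    echo "offset $off -> stripe $(stripe_for_offset "$off")"
done
```

With these numbers a full stripe is 96 KiB, so a single 1 MiB request (the block size used in the `dd` test below) is split into 64 chunks spread over all 6 disks.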

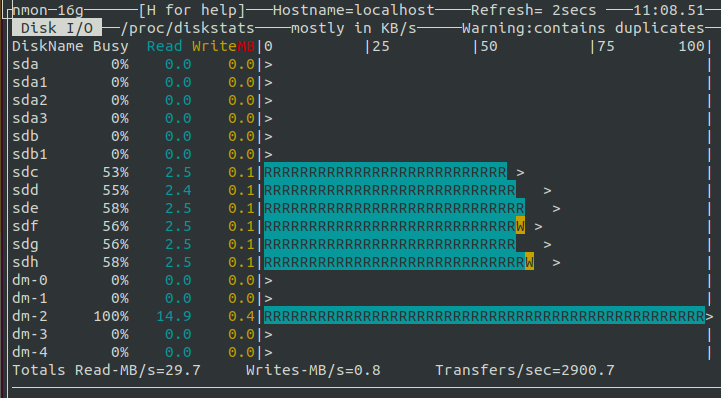

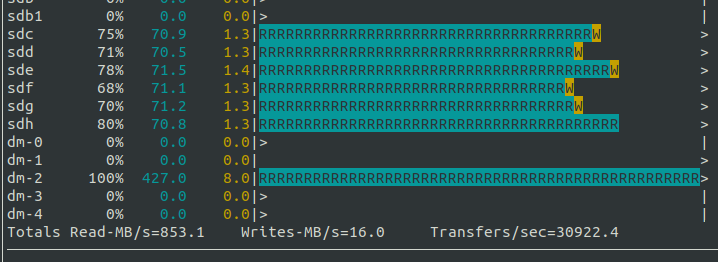

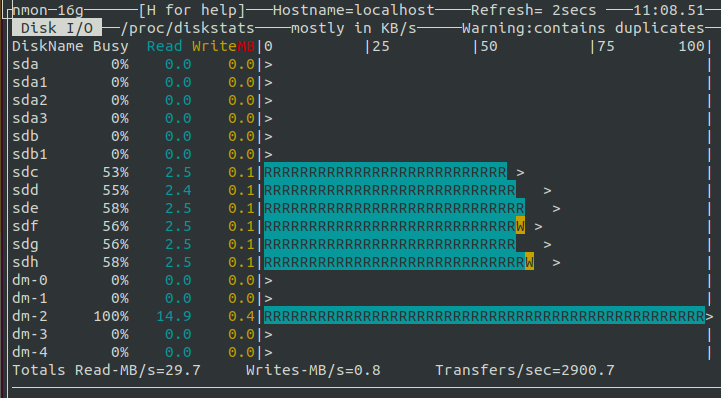

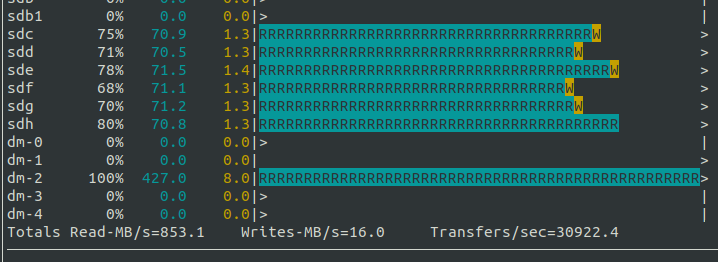

However, during heavy load we have observed that the device-mapper device **dm-2** associated with these 6 disks shows 100% utilization (busy), while the underlying disks **sd[c-h]** show only ~50% utilization.

Because of the heavy load and 100% utilization, DB server performance, and hence application performance, degrades.
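For anyone who wants to reproduce the numbers without a monitoring tool, here is a rough sketch of how a busy percentage can be derived from `/proc/diskstats`. It assumes the usual Linux layout in which field 13 is the number of milliseconds the device had at least one I/O in flight. The helper names `util_pct`, `io_ticks` and `sample_util` are hypothetical, and this is not necessarily how the tool in the screenshots computes its value.

```shell
# util_pct TICKS_BEFORE TICKS_AFTER INTERVAL_MS
# Prints the integer percentage of the interval during which the device
# had at least one request outstanding.
util_pct() {
    echo $(( ($2 - $1) * 100 / $3 ))
}

# io_ticks DEVICE
# Prints field 13 of /proc/diskstats (ms spent doing I/O) for one device.
io_ticks() {
    awk -v dev="$1" '$3 == dev { print $13 }' /proc/diskstats
}

# sample_util DEVICE [SECONDS]
# Reads the counter twice, SECONDS apart (default 1), and prints the busy percentage.
sample_util() {
    secs=${2:-1}
    before=$(io_ticks "$1")
    sleep "$secs"
    after=$(io_ticks "$1")
    echo "$1 $(util_pct "$before" "$after" $((secs * 1000)))%"
}
```

Usage would look like `for d in dm-2 sdc sdd sde sdf sdg sdh; do sample_util "$d" 5; done`, keeping in mind that this samples the devices one after another rather than over the same window. Since the counter only records whether any request was outstanding, a busy figure of 100% does not by itself show that a device serving requests in parallel is saturated.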

Here is a screenshot of the busy percentage:

Here are the LV details:


--- Logical volume ---

LV Path /dev/vg/lg

LV Name lv

VG Name vg

LV UUID P**-***-***e

LV Write Access read/write

LV Creation host, time localhost.localdomain, 2022-12-02 20:32:38 +0000

LV Status available

# open 1

LV Size 13.08 TiB

Current LE 13715376

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:2

--- Segments ---

Logical extents 0 to 13715375:

Type striped

Stripes 6

Stripe size 16.00 KiB

$ sudo stat -f /lvm-fs

File: "/home/.../lvm-fs"

ID: *** Namelen: 255 Type: xfs

Block size: 4096 Fundamental block size: 4096

Blocks: Total: 3510614400 Free: 2161893661 Available: 2161893661

Inodes: Total: 1404454400 Free: 1404453939

$ sudo dd if=/dev/dm-2 of=/dev/null bs=1M count=102400

102400+0 records in

102400+0 records out

107374182400 bytes (107 GB) copied, 184.611 s, 582 MB/s

During this test, utilization of the underlying disks stayed between 65% and 85% but never touched 100%, whereas **dm-2** was always 100% busy, as below:

Asked by curious

(21 rep)

May 1, 2023, 10:54 AM

Last activity: May 2, 2023, 06:58 AM