I have a ZFS pool comprising a mirror of two partitions encrypted with dm-crypt.

$ zpool list -v data2

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

data2 3.6T 1.00T 2.6T - - 0% 27% 1.00x ONLINE -

mirror-0 3.6T 1.00T 2.6T - - 0% 27.5% - ONLINE

luks-aaaaaaaaaaaaaaaa 3.6T - - - - - - - ONLINE

luks-bbbbbbbbbbbbbbbb 3.6T - - - - - - - ONLINE

I'm using iostat to monitor performance while scrubbing, and noticed something funny about the IOPS figures:

$ iostat -dxy -N --human sda sdb dm-2 dm-3 10

[...]

Device r/s rkB/s rrqm/s %rrqm r_await rareq-sz w/s wkB/s wrqm/s %wrqm w_await wareq-sz d/s dkB/s drqm/s %drqm d_await dareq-sz f/s f_await aqu-sz %util

dm-2 1862.50 232.5M 0.00 0.0% 11.22 127.8k 11.00 159.2k 0.00 0.0% 1.23 14.5k 0.00 0.0k 0.00 0.0% 0.00 0.0k 0.00 0.00 20.92 99.7%

dm-3 1859.80 232.2M 0.00 0.0% 11.21 127.8k 11.20 159.2k 0.00 0.0% 1.39 14.2k 0.00 0.0k 0.00 0.0% 0.00 0.0k 0.00 0.00 20.86 99.3%

sda 468.10 232.5M 1394.10 74.9% 10.73 508.6k 11.00 159.2k 0.00 0.0% 1.08 14.5k 0.00 0.0k 0.00 0.0% 0.00 0.0k 0.40 8.75 5.04 81.5%

sdb 467.00 232.2M 1392.50 74.9% 10.70 509.1k 11.20 159.2k 0.00 0.0% 1.27 14.2k 0.00 0.0k 0.00 0.0% 0.00 0.0k 0.40 12.25 5.02 81.8%

The rkB/s (data read per second) figures match between each device mapper device and its underlying disk. This is as expected. But the r/s (reads per second) column looks rather strange...

If I understand correctly, I'm getting ~450 IOPS out of each disk, but ~1800 IOPS are recorded for each device mapper device! I'd have thought that reading a single disk block from the device mapper device would correspond to reading a single block from the underlying device...
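As a sanity check on the figures above, here is a minimal Python sketch that multiplies r/s by rareq-sz (the average read request size) and compares the result with the reported rkB/s. The numbers are copied from the iostat sample; the helper name `implied_throughput_mb` is made up for illustration, and the sketch assumes that iostat's `k` and `M` suffixes differ by a factor of 1024.

```python
# Figures copied from the iostat sample above:
# device -> (r/s, rareq-sz in "k", rkB/s in "M")
samples = {
    "dm-2": (1862.50, 127.8, 232.5),
    "dm-3": (1859.80, 127.8, 232.2),
    "sda": (468.10, 508.6, 232.5),
    "sdb": (467.00, 509.1, 232.2),
}


def implied_throughput_mb(reads_per_s, avg_request_kb):
    """Throughput implied by request rate times average request size.

    Assumption: the 'k' and 'M' suffixes printed by iostat --human
    differ by a factor of 1024.
    """
    return reads_per_s * avg_request_kb / 1024


for device, (r_s, rareq_kb, reported_mb) in samples.items():
    implied = implied_throughput_mb(r_s, rareq_kb)
    print(f"{device}: implied {implied:.1f}M, reported {reported_mb}M")
```

If that assumption holds, the two columns agree with the reported throughput for all four devices. The same data is simply counted as fewer, larger requests on the disks (about 509k each) than on the device mapper devices (about 128k each).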

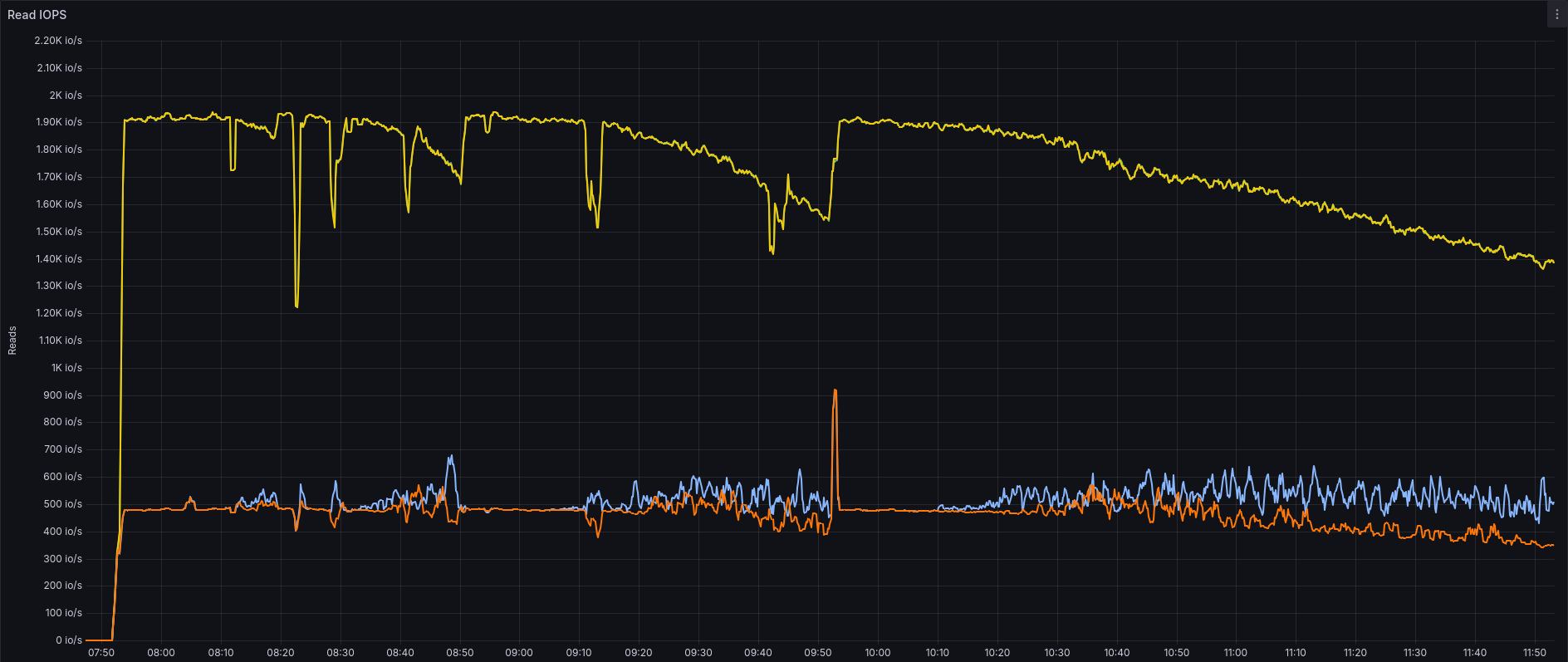

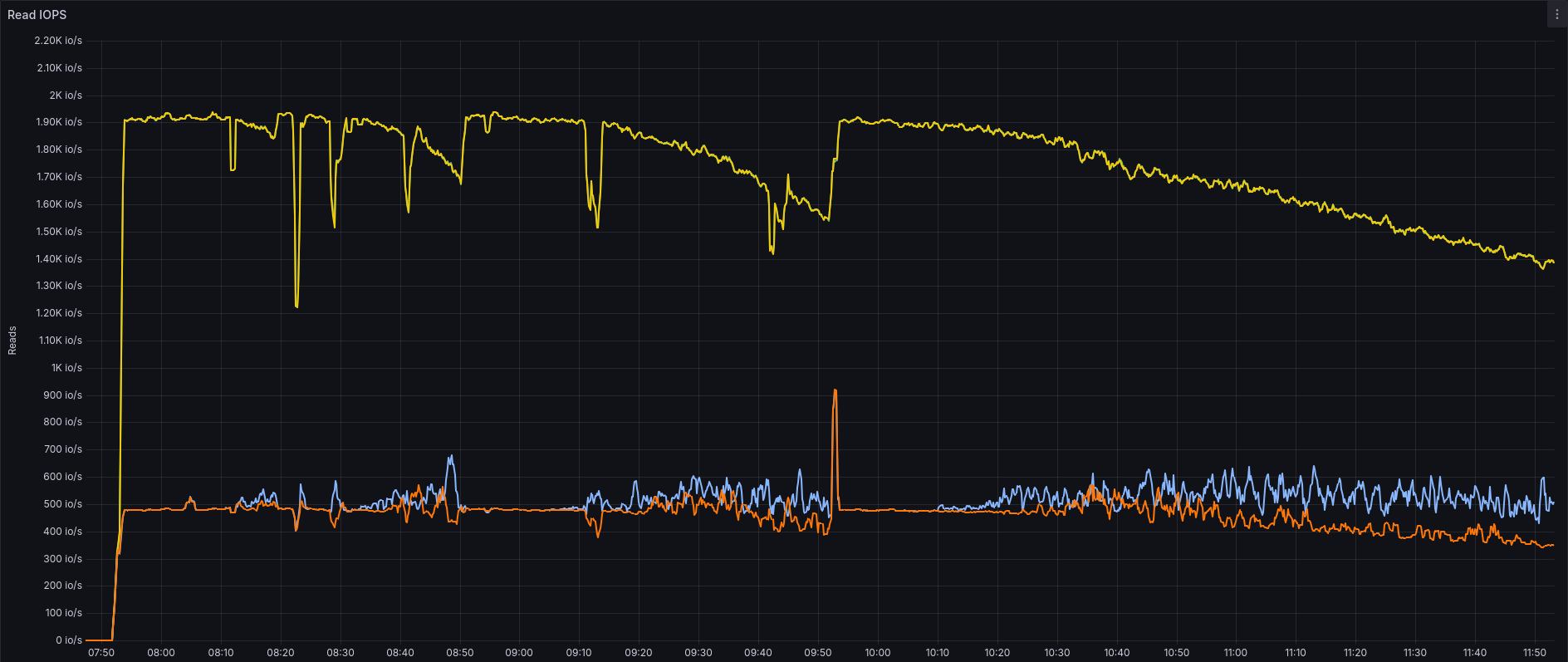

Here's a graph of the IOPS over time. Orange/Blue lines are the disks, Yellow/Green lines are the dm-crypt devices.

Another interesting thing is that the operations rate on one disk is dropping during the scrub, but not the other. Maybe that's fine; I don't know whether the layout of data on a device in a mirror vdev is literally mirrored or whether each device can have data laid out differently. But the other weird thing is that the IOPS of both device mapper devices are identical, rather than one being some multiple of one disk, and the other the same multiple of the other...

My only idea is that this has something to do with the disks' physical sector size (4096), their logical sector size (512) and the ZFS pool's ashift parameter (which I set to 12 to match the physical sector size of the disks).

But 1800 is ~4× 470, not 8×, so I don't see the direct relation between the two figures...
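To make that arithmetic concrete, here is a small Python sketch comparing the ratio I would expect from the sector sizes with the ratio actually observed in the iostat sample. The pairing of dm-2 with sda and dm-3 with sdb is an assumption based on their matching rkB/s figures, and the variable names are only illustrative.

```python
PHYSICAL_SECTOR = 4096  # bytes, physical sector size of the disks
LOGICAL_SECTOR = 512    # bytes, logical sector size of the disks
ASHIFT = 12             # pool ashift; 2**12 equals the physical sector size

# Ratio I would expect if each 4096-byte read were split into 512-byte reads.
sector_ratio = PHYSICAL_SECTOR // LOGICAL_SECTOR

# r/s figures copied from the iostat sample.
reads_per_s = {"dm-2": 1862.50, "sda": 468.10, "dm-3": 1859.80, "sdb": 467.00}

# Assumed pairing of each device mapper device with its underlying disk.
pairs = [("dm-2", "sda"), ("dm-3", "sdb")]

observed_ratios = {
    (dm, disk): reads_per_s[dm] / reads_per_s[disk] for dm, disk in pairs
}

print(f"ratio expected from sector sizes: {sector_ratio}")
for (dm, disk), ratio in observed_ratios.items():
    print(f"{dm} / {disk}: {ratio:.2f}")
```

Both observed ratios come out close to 4 rather than 8, which is why the sector-size explanation doesn't obviously fit.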

Asked by Sam Morris

(1355 rep)

Apr 17, 2025, 09:32 AM

Last activity: Apr 17, 2025, 11:03 AM
