Disabling overcommitting memory seems to cause allocs to fail too early, what could be the reason?


I tested out `echo 2 > /proc/sys/vm/overcommit_memory`, which I know isn't a commonly used or recommended mode, but for various reasons it could be beneficial for some of my workloads.

However, when I tested this out on a desktop system with 15.6GiB RAM, with barely a quarter of memory used, most programs would already start crashing or throwing errors, and Brave would fail to open tabs:

```
$ dmesg
...
[24551.333140] __vm_enough_memory: pid: 19014, comm: brave, bytes: 268435456 not enough memory for the allocation
[24551.417579] __vm_enough_memory: pid: 19022, comm: brave, bytes: 268435456 not enough memory for the allocation
[24552.506934] __vm_enough_memory: pid: 19033, comm: brave, bytes: 268435456 not enough memory for the allocation
$ ./smem -tkw
Area                          Used      Cache   Noncache
firmware/hardware                0          0          0
kernel image                     0          0          0
kernel dynamic memory         4.0G       3.5G     519.5M
userspace memory              3.4G       1.3G       2.1G
free memory                   8.2G       8.2G          0
----------------------------------------------------------
                             15.6G      13.0G       2.7G
```

Using `fork()` instead of `vfork()`, as many Linux programs suboptimally do, can cause issues once the process has a lot of memory allocated. But that doesn't seem to be the case here, since:

1. the affected processes seem to use at most a few hundred megabytes of memory,
2. the allocation listed in `dmesg` as failing (268435456 bytes, i.e. 256 MiB) is far smaller than what's listed as free, and
3. overall system memory doesn't seem to be even a quarter full.
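Point 2 can be checked mechanically. Below is a small Python sketch for tallying `__vm_enough_memory` failures from saved `dmesg` text by command and request size. The regex and the `summarize_failures` name are made up for illustration, and it assumes the kernel message keeps exactly the format shown in the excerpt above:

```python
import re
from collections import Counter

# Hypothetical pattern, written against the message format in the dmesg excerpt, e.g.
# "[24551.333140] __vm_enough_memory: pid: 19014, comm: brave, bytes: 268435456 not enough memory ..."
FAIL_RE = re.compile(
    r"__vm_enough_memory: pid: (?P<pid>\d+), comm: (?P<comm>[^,]+), "
    r"bytes: (?P<bytes>\d+) not enough memory"
)


def summarize_failures(log_text):
    """Count failed requests per (command name, requested size in bytes)."""
    counts = Counter()
    for line in log_text.splitlines():
        m = FAIL_RE.search(line)
        if m:
            counts[(m["comm"], int(m["bytes"]))] += 1
    return counts


sample = """\
[24551.333140] __vm_enough_memory: pid: 19014, comm: brave, bytes: 268435456 not enough memory for the allocation
[24551.417579] __vm_enough_memory: pid: 19022, comm: brave, bytes: 268435456 not enough memory for the allocation
[24552.506934] __vm_enough_memory: pid: 19033, comm: brave, bytes: 268435456 not enough memory for the allocation
"""

for (comm, size), n in summarize_failures(sample).items():
    # Report each size in MiB (2**20 bytes).
    print(f"{comm}: {n} failure(s) of {size / 2**20:.0f} MiB each")
```

For the three lines above this prints `brave: 3 failure(s) of 256 MiB each`, i.e. every failure is the same fixed-size request.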

Some more system info:

```
# /sbin/sysctl vm.overcommit_ratio vm.overcommit_kbytes vm.admin_reserve_kbytes vm.user_reserve_kbytes
vm.overcommit_ratio = 50
vm.overcommit_kbytes = 0
vm.admin_reserve_kbytes = 8192
vm.user_reserve_kbytes = 131072
```

With these settings:

```
echo 2 > /proc/sys/vm/overcommit_memory
echo 100 > /proc/sys/vm/overcommit_ratio
```

it doesn't seem to be the `fork()` vs `vfork()` issue; instead, the failures seem to start once app memory usage reaches the dynamic kernel memory:

I'm guessing it may not be intended the kernel keeps sitting on this dynamic memory of more than 6GiB forever without that being usable. Does anybody have an idea why it behaves like that with overcommitting disabled? Perhaps I'm missing something here.

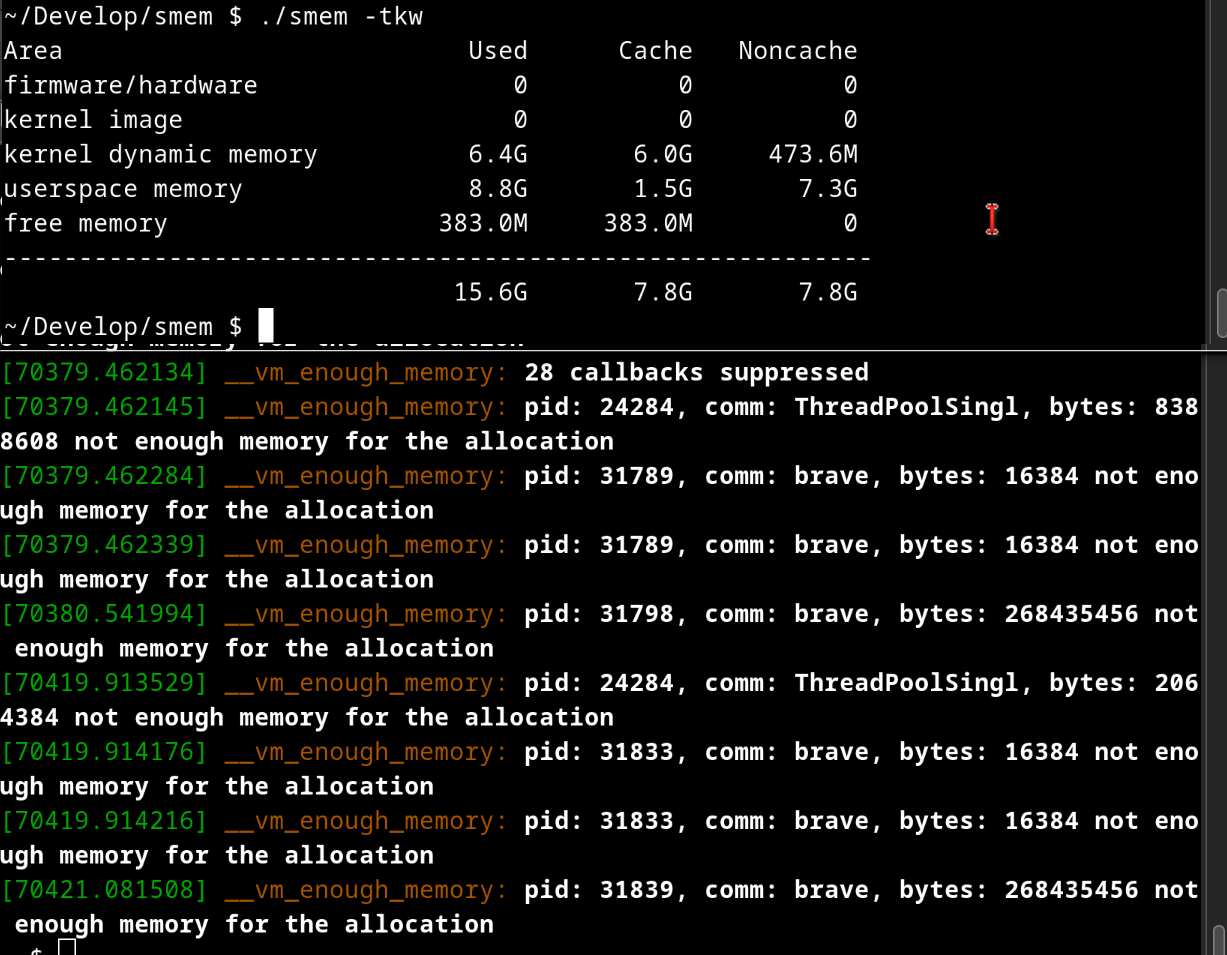

**Update 2:**

Here's more information collected when hitting this weird condition again, where the dynamic kernel memory won't get out of the way:

I'm guessing it may not be intended the kernel keeps sitting on this dynamic memory of more than 6GiB forever without that being usable. Does anybody have an idea why it behaves like that with overcommitting disabled? Perhaps I'm missing something here.

**Update 2:**

Here's more information collected when hitting this weird condition again, where the dynamic kernel memory won't get out of the way:

```
[32915.298484] __vm_enough_memory: pid: 24347, comm: brave, bytes: 268435456 not enough memory for the allocation
[32916.293690] __vm_enough_memory: pid: 24355, comm: brave, bytes: 268435456 not enough memory for the allocation
# exit
```

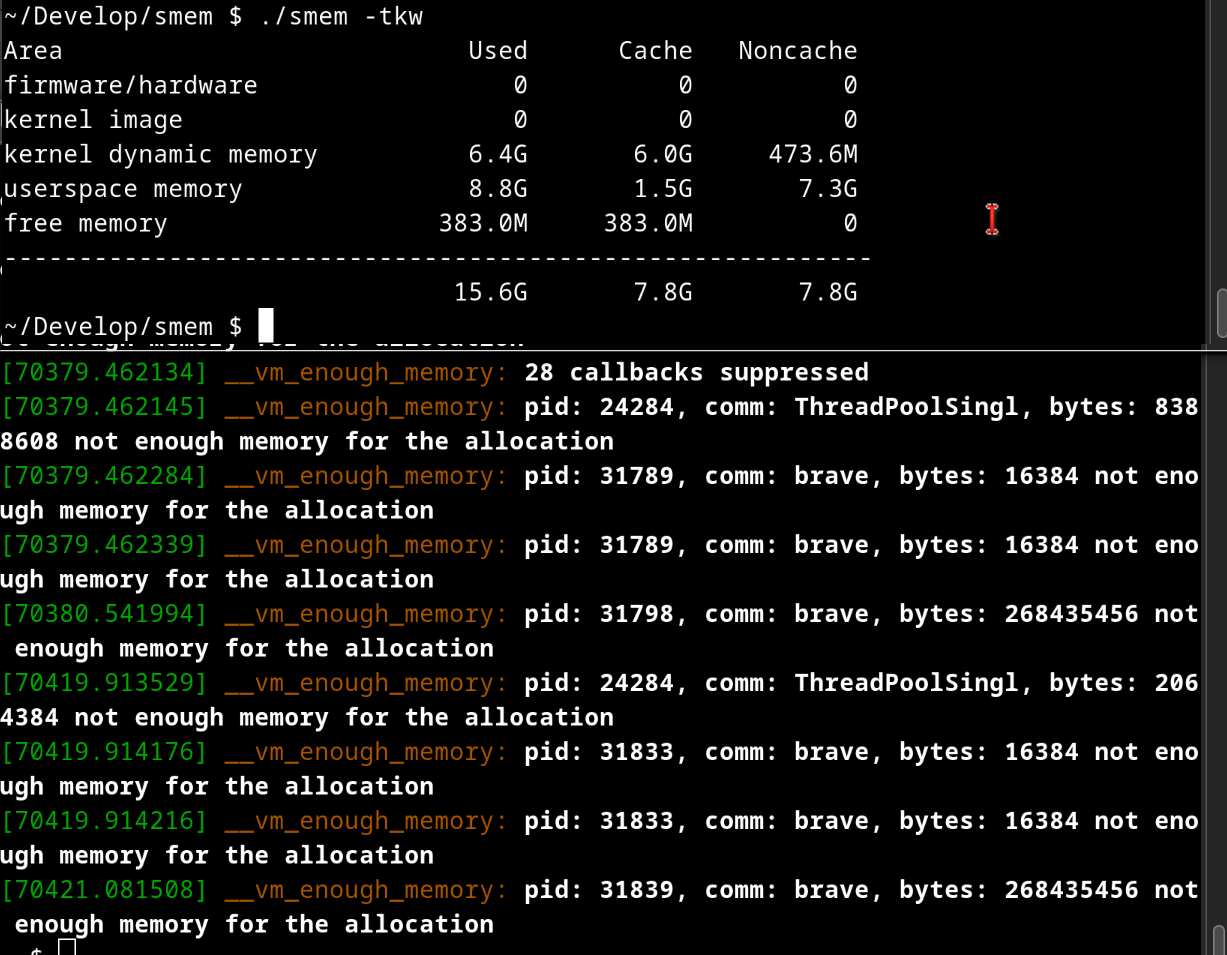

```
~/Develop/smem $ ./smem -tkw
Area                          Used      Cache   Noncache
firmware/hardware                0          0          0
kernel image                     0          0          0
kernel dynamic memory         7.8G       7.4G     384.0M
userspace memory              5.2G       1.5G       3.7G
free memory                   2.7G       2.7G          0
----------------------------------------------------------
                             15.6G      11.5G       4.1G
~/Develop/smem $ cat /proc/sys/vm/overcommit_ratio
100
~/Develop/smem $ cat /proc/sys/vm/overcommit_memory
2
~/Develop/smem $ cat /proc/meminfo
MemTotal: 16384932 kB
MemFree: 2803496 kB
MemAvailable: 10297132 kB
Buffers: 1796 kB
Cached: 8749580 kB
SwapCached: 0 kB
Active: 7032032 kB
Inactive: 4760088 kB
Active(anon): 4698776 kB
Inactive(anon): 0 kB
Active(file): 2333256 kB
Inactive(file): 4760088 kB
Unevictable: 825908 kB
Mlocked: 1192 kB
SwapTotal: 2097148 kB
SwapFree: 2097148 kB
Zswap: 0 kB
Zswapped: 0 kB
Dirty: 252 kB
Writeback: 0 kB
AnonPages: 3866720 kB
Mapped: 1520696 kB
Shmem: 1658104 kB
KReclaimable: 570808 kB
Slab: 743788 kB
SReclaimable: 570808 kB
SUnreclaim: 172980 kB
KernelStack: 18720 kB
PageTables: 53772 kB
SecPageTables: 0 kB
NFS_Unstable: 0 kB
Bounce: 0 kB
WritebackTmp: 0 kB
CommitLimit: 18482080 kB
Committed_AS: 17610184 kB
VmallocTotal: 261087232 kB
VmallocUsed: 86372 kB
VmallocChunk: 0 kB
Percpu: 864 kB
CmaTotal: 65536 kB
CmaFree: 608 kB
```
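In mode 2 the kernel's overcommit-accounting documentation describes the limit as swap plus `vm.overcommit_ratio` percent of RAM (excluding huge pages) when `vm.overcommit_kbytes` is 0, with new commitments checked against `CommitLimit` rather than against free memory. The Python sketch below recomputes `CommitLimit` from the `MemTotal` and `SwapTotal` values in this dump and shows how much room was left below it. The helper names are hypothetical. It assumes no HugeTLB pages are reserved and, for the ratio-50 figure, that `MemTotal`/`SwapTotal` were the same when the original `vm.overcommit_ratio = 50` setting was active. The reserve subtraction is a simplification of what the `vm.admin_reserve_kbytes` / `vm.user_reserve_kbytes` documentation describes:

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style 'Key: value [kB]' lines into a dict of ints."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields:
            values[key.strip()] = int(fields[0])
    return values


def expected_commit_limit_kb(mem_total_kb, swap_total_kb, overcommit_ratio, hugetlb_kb=0):
    # Assumed mode-2 formula with vm.overcommit_kbytes = 0:
    # (RAM - hugetlb) * ratio / 100 + swap. The kernel computes in pages,
    # so the real value may differ from this kB arithmetic by a few kB.
    return (mem_total_kb - hugetlb_kb) * overcommit_ratio // 100 + swap_total_kb


def commit_headroom_kb(info, admin_reserve_kb=0, user_reserve_kb=0):
    # Room left below CommitLimit, optionally minus the root reserve and the
    # per-process user reserve (simplified: the kernel caps the user reserve
    # at a small fraction of the requesting process's size).
    return info["CommitLimit"] - info["Committed_AS"] - admin_reserve_kb - user_reserve_kb


# Relevant lines copied from the /proc/meminfo output in Update 2.
sample = """\
MemTotal: 16384932 kB
MemAvailable: 10297132 kB
SwapTotal: 2097148 kB
CommitLimit: 18482080 kB
Committed_AS: 17610184 kB
"""

info = parse_meminfo(sample)
print(expected_commit_limit_kb(info["MemTotal"], info["SwapTotal"], 100))  # 18482080
print(expected_commit_limit_kb(info["MemTotal"], info["SwapTotal"], 50))   # 10289614 (about 9.8 GiB)
print(commit_headroom_kb(info))                # 871896 (about 851 MiB)
print(commit_headroom_kb(info, 8192, 131072))  # 732632
print(info["MemAvailable"])                    # 10297132
```

With `vm.overcommit_ratio = 100` the formula reproduces the reported `CommitLimit` exactly. At the moment this snapshot was taken, the gap between `CommitLimit` and `Committed_AS` (about 851 MiB) was far smaller than `MemAvailable` (about 9.8 GiB).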

Asked by E. K.

(153 rep)

Jul 11, 2025, 04:59 AM

Last activity: Jul 13, 2025, 08:26 AM