Ubuntu 18.04 VM in emergency/maintenance mode due to failed corrupted raided disk


I have a VM with an attached RAID device that has this fstab entry:

```
/dev/md127 /mnt/blah ext4 nofail 0 2
```
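To make the fields of that entry explicit, here is a small shell sketch that splits it into the six fields described in fstab(5). The helper `describe_fstab_entry` is hypothetical (not a system tool) and assumes a single whitespace-separated entry with no comment text. It shows that the `nofail` mount option (field 4) and the fsck pass number (field 6, here `2`) are separate settings.

```shell
#!/bin/sh
# Hypothetical helper: print the six fields of one fstab(5) entry.
# Assumes a single whitespace-separated entry with no comment text.
describe_fstab_entry() {
    printf '%s\n' "$1" | awk '{
        printf "device=%s mountpoint=%s type=%s options=%s dump=%s passno=%s\n", $1, $2, $3, $4, $5, $6
        # The options field is comma-separated; look for the nofail flag.
        n = split($4, opts, ",")
        nofail = "no"
        for (i = 1; i <= n; i++) if (opts[i] == "nofail") nofail = "yes"
        print "nofail=" nofail
        # Per fstab(5), a missing or zero passno means fsck skips the filesystem.
        print "checked_at_boot=" (($6 + 0) != 0 ? "yes" : "no")
    }'
}

describe_fstab_entry "/dev/md127 /mnt/blah ext4 nofail 0 2"
```

For the entry above this reports `nofail=yes` and `checked_at_boot=yes`.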

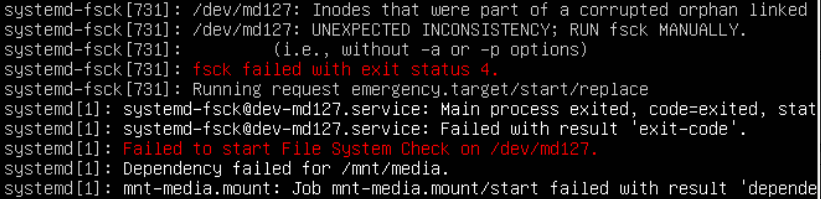

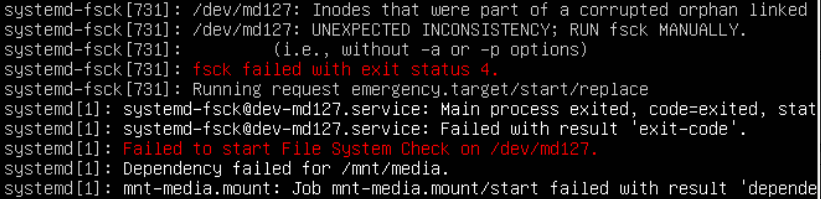

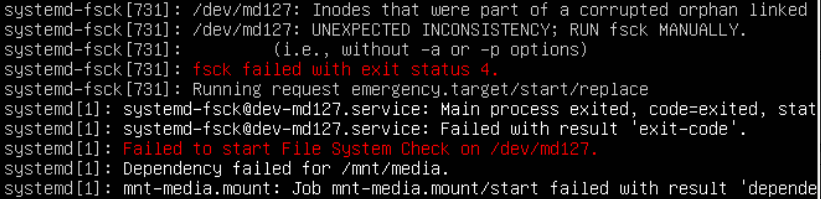

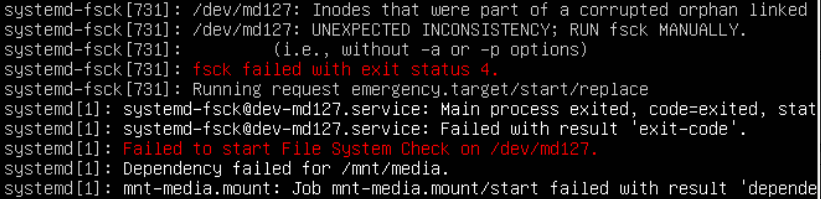

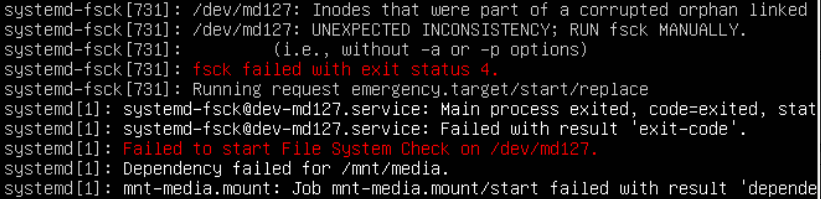

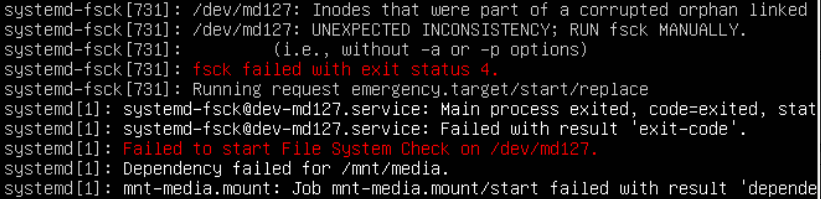

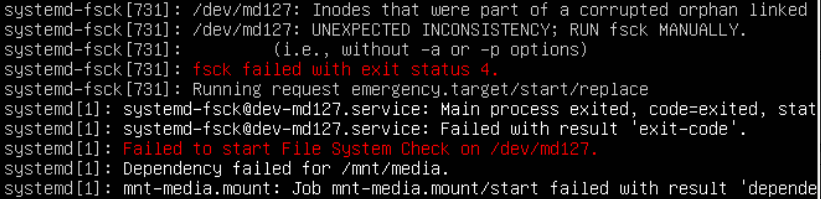

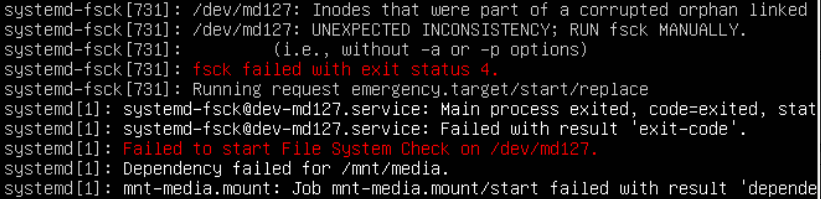

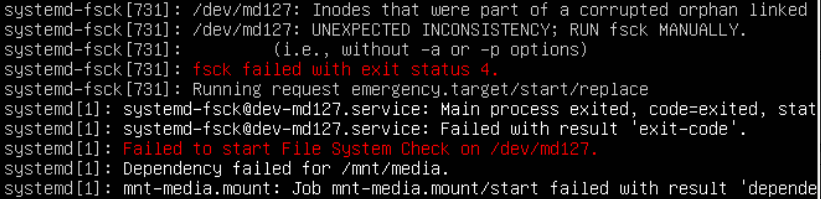

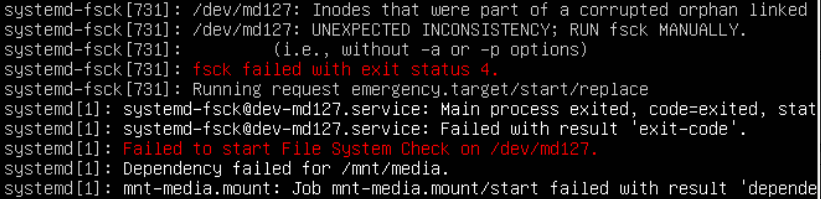

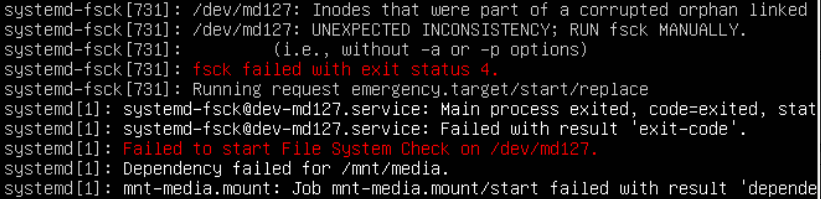

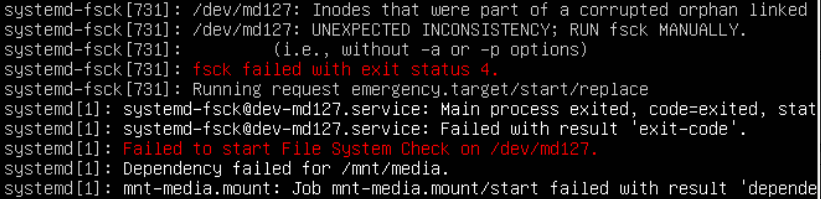

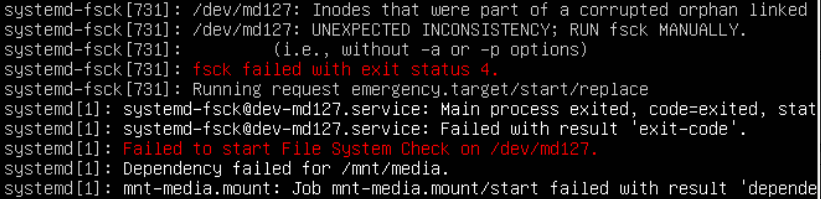

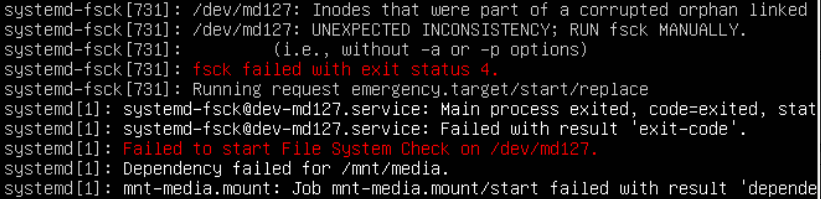

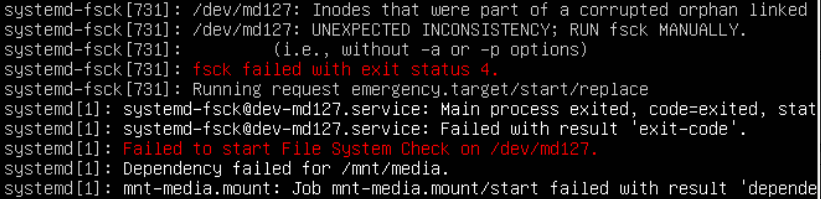

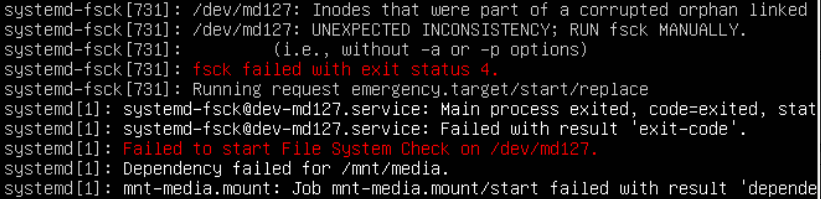

The RAID disks are corrupted, and during startup the VM entered emergency/maintenance mode, which means only a local user on the host could exit this mode and start it up normally. During a normal startup, the following appeared in syslog:

```
systemd-fsck: /dev/md127 contains a file system with errors, check forced.
systemd-fsck: /dev/md127: Inodes that were part of a corrupted orphan linked list found.
systemd-fsck: /dev/md127: UNEXPECTED INCONSISTENCY; RUN fsck MANUALLY.
systemd-fsck: #011(i.e., without -a or -p options)
systemd-fsck: fsck failed with exit status 4.
systemd-fsck: Running request emergency.target/start/replace
systemd: systemd-fsck@dev-md127.service: Main process exited, code=exited, status=1/FAILURE
systemd: systemd-fsck@dev-md127.service: Failed with result 'exit-code'.
systemd: Failed to start File System Check on /dev/md127.
systemd: Dependency failed for /mnt/blah.
systemd: Dependency failed for Provisioner client daemon.
```
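The "exit status 4" in this log is an fsck exit code. According to fsck(8), these codes are bit flags that can be combined, so a status can be decoded bit by bit. The helper `decode_fsck_status` below is hypothetical and only illustrates that table; it is not part of fsck or systemd.

```shell
#!/bin/sh
# Hypothetical helper: decode an fsck exit status using the table in fsck(8).
decode_fsck_status() {
    code=$1
    if [ "$code" -eq 0 ]; then
        echo "0: no errors"
        return 0
    fi
    for bit in 1 2 4 8 16 32 128; do
        # The codes are bit flags, so test each one with a bitwise AND.
        if [ $((code & bit)) -ne 0 ]; then
            case $bit in
                1)   echo "1: filesystem errors corrected" ;;
                2)   echo "2: system should be rebooted" ;;
                4)   echo "4: filesystem errors left uncorrected" ;;
                8)   echo "8: operational error" ;;
                16)  echo "16: usage or syntax error" ;;
                32)  echo "32: checking canceled by user request" ;;
                128) echo "128: shared-library error" ;;
            esac
        fi
    done
}

# The status reported by systemd-fsck in the log above.
decode_fsck_status 4   # prints "4: filesystem errors left uncorrected"
```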

My guess is that the OS goes into emergency/maintenance mode because of the corrupt RAID disks:

```
systemctl --state=failed
  UNIT                           LOAD   ACTIVE SUB    DESCRIPTION
● systemd-fsck@dev-md127.service loaded failed failed File System Check on /dev/md127
```
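The unit names in these messages are derived from the paths in the fstab entry: `/dev/md127` becomes the instance `dev-md127` of `systemd-fsck@.service`, and `/mnt/blah` becomes `mnt-blah.mount`. The sketch below shows that mapping with a hypothetical, simplified helper `escape_simple_path`; it only handles paths made of letters, digits and `_`, whereas the real tool, `systemd-escape --path`, also escapes `-` and other special characters.

```shell
#!/bin/sh
# Hypothetical helper: simplified version of `systemd-escape --path`.
# Only correct for paths whose components contain letters, digits and "_".
escape_simple_path() {
    # Collapse repeated slashes, drop the leading/trailing slash, then map "/" to "-".
    printf '%s\n' "$1" | tr -s '/' | sed -e 's|^/||' -e 's|/$||' -e 's|/|-|g'
}

dev=$(escape_simple_path /dev/md127)
mnt=$(escape_simple_path /mnt/blah)
echo "fsck unit:  systemd-fsck@${dev}.service"
echo "mount unit: ${mnt}.mount"
```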

What I want is for the VM to start up regardless of whether the RAID drives are corrupt/unmountable, so it shouldn't go into emergency/maintenance mode. I followed these posts to try to disable emergency/maintenance mode:

- https://unix.stackexchange.com/questions/416640/how-to-disable-systemd-agressive-emergency-shell-behaviour

- https://unix.stackexchange.com/questions/326493/how-to-determine-exactly-why-systemd-enters-emergency-mode/393711#393711

- https://unix.stackexchange.com/questions/422319/emergency-mode-and-local-disk


I first had to create the directory `local-fs.target.d` in `/etc/systemd/system/`, which felt wrong. I then created `/etc/systemd/system/local-fs.target.d/nofail.conf` containing:

```
[Unit]
OnFailure=
```
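The steps just described can be sketched as a shell function. Creating the `.d` directory by hand is a normal way to add a drop-in (`systemctl edit local-fs.target` creates the same directory interactively, though it names the file `override.conf`). The function name `install_local_fs_dropin` and its optional root-prefix argument are hypothetical, added only so the steps can be tried in a scratch directory before touching the real system.

```shell
#!/bin/sh
# Hypothetical helper reproducing the drop-in steps described above.
# Optional $1: root prefix (defaults to the real root; writing there needs root).
install_local_fs_dropin() {
    root=${1:-}
    dir="$root/etc/systemd/system/local-fs.target.d"

    # The .d directory does not exist by default, so create it.
    mkdir -p "$dir" || return 1

    # Same content as the nofail.conf shown above.
    printf '[Unit]\nOnFailure=\n' > "$dir/nofail.conf"
}

# On the real system, reload systemd afterwards so the drop-in is picked up:
#   install_local_fs_dropin && systemctl daemon-reload
```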

After loading that drop-in file, I was able to confirm that local-fs.target found it:

```
sudo systemctl status local-fs.target
● local-fs.target - Local File Systems
   Loaded: loaded (/lib/systemd/system/local-fs.target; static; vendor preset: enabled)
  Drop-In: /etc/systemd/system/local-fs.target.d
           └─nofail.conf
   Active: active since Tue 2019-01-08 12:36:41 UTC; 3h 55min ago
     Docs: man:systemd.special(7)
```

BUT after rebooting, the VM still ended up in emergency/maintenance mode. Have I missed something? Does the nofail.conf solution not work with RAID disks?

----------

EDIT: I was able to get a printout of the logs from when the system booted into emergency mode (sorry, it's a screenshot, since I don't have access to the host and had to ask the owner for it):


Here's the output of `systemctl status` for `systemd-fsck@dev-md127`:

```
sudo systemctl status --no-pager --full systemd-fsck@dev-md127
● systemd-fsck@dev-md127.service - File System Check on /dev/md127
   Loaded: loaded (/lib/systemd/system/systemd-fsck@.service; static; vendor preset: enabled)
   Active: failed (Result: exit-code) since Thu 2019-01-10 12:05:44 UTC; 2h 57min ago
     Docs: man:systemd-fsck@.service(8)
  Process: 1025 ExecStart=/lib/systemd/systemd-fsck /dev/md127 (code=exited, status=1/FAILURE)
 Main PID: 1025 (code=exited, status=1/FAILURE)

systemd: Starting File System Check on /dev/md127...
systemd-fsck: /dev/md127 contains a file system with errors, check forced.
systemd-fsck: /dev/md127: Inodes that were part of a corrupted orphan linked list found.
systemd-fsck: /dev/md127: UNEXPECTED INCONSISTENCY; RUN fsck MANUALLY.
systemd-fsck: (i.e., without -a or -p options)
systemd-fsck: fsck failed with exit status 4.
systemd-fsck: Running request emergency.target/start/replace
systemd: systemd-fsck@dev-md127.service: Main process exited, code=exited, status=1/FAILURE
systemd: systemd-fsck@dev-md127.service: Failed with result 'exit-code'.
systemd: Failed to start File System Check on /dev/md127.
```

[…] `nofail` set in `/etc/fstab`. Now the questions are:

1. Which dependency is the "Dependency failed" message in the screenshot referring to?

2. If fsck fails on /dev/md127, why does the system enter emergency mode, and how do I disable that?

EDIT 2:

A couple of other things I can add:

1. The VM is a KVM VM.

2. It's a software RAID.

Kind regards,

Ankur

Asked by Ankur22

(41 rep)

Jan 8, 2019, 04:39 PM

Last activity: Jul 4, 2025, 11:09 AM
